[Java][Profiling] Java/JVM native memory leaks

In this article, I would like to tackle the native memory leaks problem. Usually such a problem

starts with some monitoring system that alerts about high memory (RAM) utilization. We start the investigation and

we see that our Java process has eaten 8GB of RAM with Xmx/Xms set to 4GB. How is that possible?

Well, the heap is not the only memory needed for Java applications to run. Other pretty obvious memory areas are:

- Threads - each thread needs some native memory for its data

- Metaspace - for our class definitions

- Code cache/code heap - for JIT compiler output

- …

NMT

JVM has a dedicated feature to track where the memory is needed. It’s called “Native memory tracking” (NMT) and is disabled by default. There are two modes of NMT:

- Summary - This mode tracks the number of bytes allocated by JVM in each of the NMT category

- Detail - This one gathers the same data as the summary + keeps track of which part of the code needs that memory

There are many rumors and misconceptions about NMT overhead. In the Oracle documentation you can find the following:

Enabling NMT will result in 5-10 percent JVM performance drop and memory usage for NMT adds 2 words to all malloc memory as malloc header. NMT memory usage is also tracked by NMT.

The confusing part of this quote is the fact that it’s written in detail mode explanation, and I cannot tell if it’s a generic statement for both modes or just the latter.

The much better explanation you can find in this Stack Overflow response by Andrei Pangin:

The overhead of Native Memory Tracking obviously depends on how often the application allocates native memory. Usually, this is not something too frequent in a Java application, but cases may differ. Since you’ve already tried and didn’t notice performance difference, your application is apparently not an exception.

In the

summarymode, Native Memory Tracking roughly does the following things:

- increases every

mallocrequest in the JVM by 2 machine words (16 bytes);- records the allocation size and flags in these 2 words;

- atomically increments (or decrements on

free) the counter corresponding to the given memory type;- besides

mallocandfree, it also handles changes in virtual memory reservation and allocations of new arenas, but these are even less frequent thanmalloc/freecalls.So, to me, the overhead is quite small; 5-10% is definitely a large overestimation (the numbers would make sense for

detailmode which collects and stores stack traces, which is expensive, butsummarydoesn’t do that). When many threads concurrently allocate/free native memory, the update of an atomic counter could become a bottleneck, but again, that’s more like an extreme case. In short, if you measured a real application and didn’t notice any degradation, you’re likely safe to enable NMTsummaryin production.

The most important question is: can I run NMT in production? From my experience: most likely yes, in summary mode. We also need to mind circumstances when such a feature is needed - when we trace strange memory consumption. It’s a really hard job, so we should use all the tools we can to find the root cause of the problem.

Usage - summary

To enable NMT in summary mode you need to start JVM with -XX:NativeMemoryTracking=summary. After that whenever we want to understand why JVM allocated some native memory we can run:

jcmd <pid> VM.native_memory summary scale=MB

Sample output:

Native Memory Tracking:

(Omitting categories weighting less than 1MB)

Total: reserved=2340MB, committed=1237MB

- Java Heap (reserved=1024MB, committed=1024MB)

(mmap: reserved=1024MB, committed=1024MB)

- Class (reserved=1025MB, committed=11MB)

(classes #16326)

( instance classes #15180, array classes #1146)

(malloc=1MB #31555)

(mmap: reserved=1024MB, committed=10MB)

( Metadata: )

( reserved=64MB, committed=56MB)

( used=55MB)

( waste=0MB =0,63%)

( Class space:)

( reserved=1024MB, committed=10MB)

( used=10MB)

( waste=0MB =2,88%)

- Thread (reserved=50MB, committed=4MB)

(thread #51)

(stack: reserved=50MB, committed=4MB)

- Code (reserved=49MB, committed=15MB)

(malloc=1MB #7383)

(mmap: reserved=48MB, committed=14MB)

- GC (reserved=90MB, committed=90MB)

(malloc=19MB #9300)

(mmap: reserved=70MB, committed=70MB)

- Symbol (reserved=17MB, committed=17MB)

(malloc=15MB #401954)

(arena=1MB #1)

- Native Memory Tracking (reserved=7MB, committed=7MB)

(tracking overhead=7MB)

- Shared class space (reserved=12MB, committed=12MB)

(mmap: reserved=12MB, committed=12MB)

- Arena Chunk (reserved=1MB, committed=1MB)

(malloc=1MB)

- Metaspace (reserved=64MB, committed=56MB)

(mmap: reserved=64MB, committed=56MB)

Usage - diff

If we want to understand which NMT category is increasing we don’t need to compare two outputs like the one above. NMT has built-in diff operation. First, we need to set a baseline with:

jcmd <pid> VM.native_memory baseline

After some time (when we see that more memory was consumed by the Java process) we can run:

jcmd <pid> VM.native_memory summary.diff scale=MB

Sample output:

Native Memory Tracking:

(Omitting categories weighting less than 1MB)

Total: reserved=3350MB +1004MB, committed=2257MB +1013MB

- Java Heap (reserved=1024MB, committed=1024MB)

(mmap: reserved=1024MB, committed=1024MB)

- Class (reserved=1026MB, committed=12MB)

(classes #16935 +7)

( instance classes #15732 +4, array classes #1203 +3)

(malloc=2MB #34724 +2040)

(mmap: reserved=1024MB, committed=10MB)

: ( Metadata)

( reserved=64MB, committed=58MB)

( used=57MB)

( waste=0MB =0,58%)

: ( Class space)

( reserved=1024MB, committed=10MB)

( used=10MB)

( waste=0MB =2,82%)

- Thread (reserved=48MB -4MB, committed=4MB)

(thread #0)

(stack: reserved=48MB -4MB, committed=4MB)

- Code (reserved=50MB, committed=21MB +6MB)

(malloc=1MB #10044 +2426)

(mmap: reserved=48MB, committed=20MB +6MB)

- GC (reserved=90MB, committed=90MB)

(malloc=19MB #13489 +3620)

(mmap: reserved=70MB, committed=70MB)

- Other (reserved=1011MB +1007MB, committed=1011MB +1007MB)

(malloc=1011MB +1007MB #1025 +1008)

- Symbol (reserved=17MB, committed=17MB)

(malloc=16MB #412735 +275)

(arena=1MB #1)

- Native Memory Tracking (reserved=7MB, committed=7MB)

(tracking overhead=7MB)

- Shared class space (reserved=12MB, committed=12MB)

(mmap: reserved=12MB, committed=12MB)

- Metaspace (reserved=64MB, committed=58MB)

(mmap: reserved=64MB, committed=58MB)

First, we can look at information in line starting with Total where we can see +1013MB. It means that our JVM allocated almost 1GB of native memory since we ran the baseline command.

When we browse all the categories we can see this one:

- Other (reserved=1011MB +1007MB, committed=1011MB +1007MB)

(malloc=1011MB +1007MB #1025 +1008)

NMT - categories

Let’s now go through those categories and try to understand what we can do if memory consumption is increasing there.

Java Heap- An increase in that category means that your initial or minimal heap size is different from the max one and the heap simply grows.Class- New classes were loaded in the runtime. Depending on the volume of consumed memory it can be the natural behavior of the application. Many libraries define/load classes in the runtime. If the increase here is abnormal you can try to profile where new classes are loaded with Async-profiler.Thread- In most configurations, every Java thread can use up to 1MB (-Xss). Increasing consumption in that category usually means:- that existing threads needed more memory - for example, it reached code with deep stack

- new threads were created - you can find places that create new threads with Async-profiler.

Code- This is a space for JIT, it can grow up to ~240MB (-XX:ReservedCodeCacheSize). Increasing consumption here is natural. The longer our JVM is running the more methods are compiled by JIT.Other- Here you can find a native memory allocated withByteBuffer.allocateDirect. If you want to understand why this category grows you can run Async-profiler in method mode to find invocations of theallocateDirectmethod, or you can analyze the heap dump. You can also find here other allocations done withUnsafe.allocateMemorymethod.

Those are the most common scenarios I approached in my career. Mind that JVM can also have issues in its code that result in large memory consumption like JDK-8240723.

NMT vs RSS/PSS vs total memory allocated in the system

To move on I want to point out the difference between three metrics:

- NMT committed memory - how much memory JVM used, counted by JVM internals.

- RSS/PSS - how much memory the Java process used from an OS perspective.

- The total memory allocated in the system - this one is straightforward, it counts not only the Java process but everything system-wide.

Today many of the new applications written in Java are run in Docker containers so we would expect those three values to be the same. Well, the reality is a bit different.

Native libs

When you run your Java code you are allowed to invoke native code with JNI (and other mechanisms, but that’s irrelevant). Additionally, JDK can invoke native libraries via JNI

(as en example you can look at the source code of java.util.zip.Inflater class, a lot of native methods).

The code inside the external native library is out of JVM’s control.

That means that if our native code does malloc, JVM doesn’t know about that. This basically means that NMT cannot trace such an allocation. Remember that you don’t need to invoke JNI by

yourself, your dependencies can do it for you. A lot of libraries do that. If your code or your dependency code has a native memory leak done inside the JNI section RSS/PSS can grow while NMT

committed memory will stay the same.

To track down native memory leaks (no matter if it is inside JVM or native libs) we need to find allocated memory that hasn’t been freed. For many years there was no tool that could do it and give you Java stacktraces. In the past, we could use Async-profiler and trace:

page-faultsmallocinvocationsmprotectinvocations

But all we could get from it was where memory was allocated. We didn’t know which parts of the allocated memory were freed later. For all those years my approach to finding native memory leaks was as follows:

- Check JMX metrics (either in Grafana/Zabbix/… or by manual JMX connection) to see what JVM reports for classloading and direct buffer allocations.

- If there was no visible leak - turn on NMT in summary mode and pray that the answer is there.

- If there was no visible leak in NMT - profile for

page-faultsand filter manually every allocation that is tracked by NMT - For every native allocation found while RSS/PSS was increasing:

- Filter manually every allocation that is tracked by NMT

- Run such a code in isolation.

- Check if RSS/PSS increases.

Current “microservices” can have easily tens (and even more than a hundred) of different places where native memory is allocated. That was really time-consuming work, and from time to time there was no native memory leak after all - we will get to that later.

Thankfully those dark times are over. There is a new mode in the Async-profiler called nativemem. It keeps track of allocations and frees and can show us which memory hasn’t been freed during profiling.

That mode literally can save you hours of your life with investigation. This new mode is available in the 4.0 release. Let’s see the difference between nativemem and previous approaches.

I have an application with two endpoints:

@RestController

@RequestMapping("/examples/nativemem")

@RequiredArgsConstructor

class NativeMemController {

private final NativeMemService nativeMemService;

@GetMapping("/leak")

String getWithLeak() {

nativeMemService.createNewBuffer(true);

return "OK";

}

@GetMapping("/no-leak")

String getNoLeak() {

nativeMemService.createNewBuffer(false);

return "OK";

}

}

class NativeMemService {

private final List<ByteBuffer> buffers = new ArrayList<>();

void createNewBuffer(boolean leak) {

ByteBuffer direct = ByteBuffer.allocateDirect(1024 * 1024);

if (leak) {

buffers.add(direct);

}

}

}

A call to /leak endpoint will create 1 MB of native memory leak, a call to /no-leak endpoint will allocate 1 MB of native memory that should be freed by GC.

Here is a full script that I did:

#Warm-up

ab -n 300 http://localhost:8081/examples/nativemem/leak

ab -n 300 http://localhost:8081/examples/nativemem/no-leak

./asprof -e nativemem -f nativemem.jfr start 11817

ab -n 300 http://localhost:8081/examples/nativemem/leak

ab -n 300 http://localhost:8081/examples/nativemem/no-leak

jcmd 11817 GC.run

./asprof stop 11817

./jfrconv --total --nativemem --leak nativemem.jfr nativemem.html

./asprof -e page-faults -f faults.jfr start 11817

ab -n 300 http://localhost:8081/examples/nativemem/leak

ab -n 300 http://localhost:8081/examples/nativemem/no-leak

jcmd 11817 GC.run

./asprof stop 11817

./jfrconv faults.jfr faults.html

./asprof -e mprotect -f mprotect.jfr start 11817

ab -n 300 http://localhost:8081/examples/nativemem/leak

ab -n 300 http://localhost:8081/examples/nativemem/no-leak

jcmd 11817 GC.run

./asprof stop 11817

./jfrconv mprotect.jfr mprotect.html

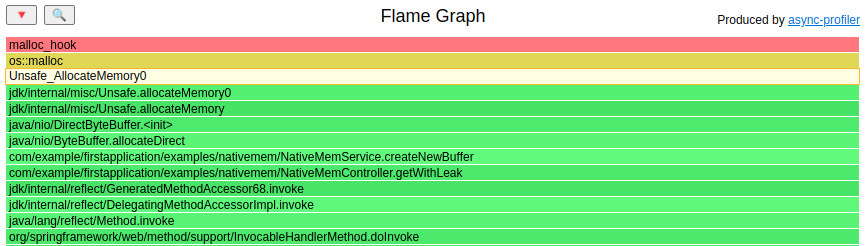

nativemem flame graph shows the correct code to blame:

Since we used the

Since we used the --total option with jfrconv it also shows that we leaked 300 MB:

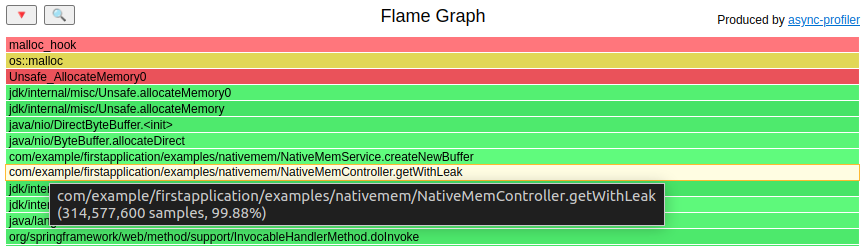

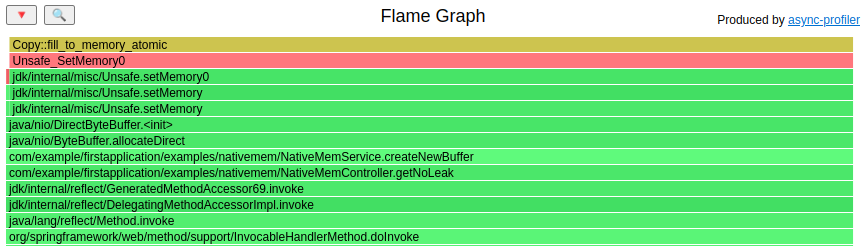

mprotect flame graph shows both allocations, leaky and non-leaky. In the real world, you can easily get one leaky and one hundred non-leaky:

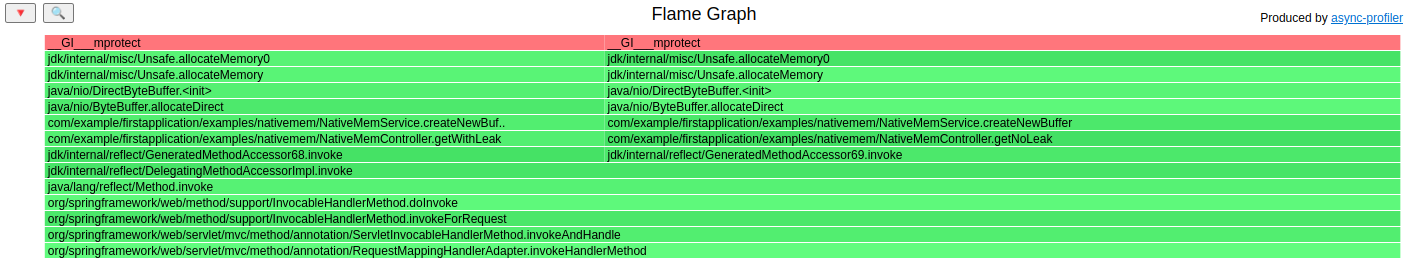

page-faults is the worst in that test, it blames the wrong endpoint and doesn’t show the proper allocation path in the flame graph:

Malloc

If you’ve tried to find a native memory leak with no results … well, there are still some other strange cases to consider. In this article, I cover only the most popular that I’ve seen many times.

This strange case is malloc from GLIBC causing strange memory consumption. There are many articles on that topic in the internet. Here is

one of them from Ruby. Mind that malloc an is allocator used by many languages, so if you do your search

on that topic don’t narrow it to Java only.

Let’s consider a program:

class ByteBufferFragmentationExample {

public static void main(String[] args) throws InterruptedException {

Thread.sleep(10_000);

for (int i = 0; i < 60; i++) {

new Thread(() -> {

Random random = new Random();

while (true) {

ByteBuffer.allocateDirect(random.nextInt(1024 * 1024));

}

}).start();

}

}

}

Let’s run it with -Xmx1G -Xms1G -XX:+AlwaysPreTouch -XX:NativeMemoryTracking=summary, so our heap is limited to 1 GB and allocated at the start of JVM. At the beginning, the RSS and PSS are:

$ smem -c "pid command rss pss" -ak -P "ByteBufferFragmentationExample"

PID Command RSS PSS

15753 /home/pasq/JDK/amazon-corretto-17.0.1.12.1-linux-x64 1.1G 1.1G

After some time:

PID Command RSS PSS

15474 /home/pasq/JDK/amazon-corretto-17.0.1.12.1-linux-x64 5.1G 5.1G

If we check the NMT output we will get:

jcmd ByteBufferFragmentationExample VM.native_memory summary scale=MB

15753:

Native Memory Tracking:

(Omitting categories weighting less than 1MB)

Total: reserved=3519MB, committed=2176MB

- Java Heap (reserved=1024MB, committed=1024MB)

(mmap: reserved=1024MB, committed=1024MB)

- Class (reserved=1024MB, committed=1MB)

(classes #2311)

( instance classes #2082, array classes #229)

(mmap: reserved=1024MB, committed=1MB)

( Metadata: )

( reserved=8MB, committed=5MB)

( used=5MB)

( waste=0MB =1,86%)

( Class space:)

( reserved=1024MB, committed=1MB)

( used=1MB)

( waste=0MB =13,09%)

- Thread (reserved=91MB, committed=8MB)

(thread #92)

(stack: reserved=91MB, committed=8MB)

- Code (reserved=242MB, committed=8MB)

(mmap: reserved=242MB, committed=7MB)

- GC (reserved=89MB, committed=89MB)

(malloc=19MB #4046)

(mmap: reserved=70MB, committed=70MB)

- Compiler (reserved=1MB, committed=1MB)

- Other (reserved=1024MB, committed=1024MB)

(malloc=1024MB #2048)

- Symbol (reserved=2MB, committed=2MB)

(malloc=1MB #22702)

(arena=1MB #1)

- Native Memory Tracking (reserved=1MB, committed=1MB)

(tracking overhead=1MB)

- Shared class space (reserved=12MB, committed=12MB)

(mmap: reserved=12MB, committed=12MB)

- Arena Chunk (reserved=1MB, committed=1MB)

(malloc=1MB)

- Metaspace (reserved=8MB, committed=5MB)

(mmap: reserved=8MB, committed=5MB)

Looks like Java only committed 2 GB of memory. There is no point in looking for a leak in our program, there is no leak. The result from allocateDirect method can be collected by GC

the on next run.

Nowadays, I see that problem most commonly when engineers decide to use an Amazon Linux, usually by fetching an Amazon Corretto docker image. This problem is not Amazon specific, in last few years I mostly profiled applications deployed on AWS. Such applications usually used Corretto docker images. Example Dockerfile for Amazon Linux starts with:

FROM amazoncorretto:21

My approach to that problem is to change allocator to jemalloc:

FROM amazoncorretto:21

RUN amazon-linux-extras install -y epel

RUN yum install -y jemalloc-devel

ENV LD_PRELOAD="/usr/lib64/libjemalloc.so"

Mind that if you’re using Alpine images/Linux you’re using musl libc, not GLIBC. I’ve never seen that issue with musl.