[Java][Profiling] Async-profiler - manual by use cases

This blog post contains examples of async-profiler usages that I have found helpful in my job. Some content of this post is copy-pasted from previous entries, as I just wanted to avoid unnecessary jumps between articles.

The goal of that post is to give examples. It’s not a replacement for the project README. I advise you to read it, as it tells you how to obtain async-profiler binaries and more.

All the examples that you are going to see here are synthetic reproductions of real-world problems that I solved during my career. Even if some examples look like “it’s too stupid to happen anywhere,” well, it isn’t.

That post will be maintained. Whenever I find a new use case that I think is worth sharing, I will update this post.

- Change log

- Acknowledgments

- Profiled application

- How to run an async-profiler

- Output formats

- Flame graphs

- Basic resources profiling

- Time to safepoint

- Methods profiling

- Native functions

- Perf events

- Native memory leaks

- Filtering single request

- Continuous profiling

- Contextual profiling

- Stability

- Overhead

- Random thoughts

Change log

- 2022-12-16 - Initial version

Acknowledgments

I would like to say thank you to Andrei Pangin (Lightrun) for all the work he did to create async-profiler and for his time and remarks on that article, Johannes Bechberger (SapMachine team at SAP) for all the work on making OpenJDK more stable with profilers, the input he gave me on overhead and stability, and the copy editing of this document, Marcin Grzejszczak (VMware) for great insight on how to integrate this profiler with Spring, Krystian Zybała for the review.

Profiled application

I’ve created a Spring Boot application for this post so that you can run the following examples on your own. It’s available on my GitHub. To build the application, do the following:

git clone https://github.com/krzysztofslusarski/async-profiler-demos

cd async-profiler-demos

mvn clean package

To run the application, you need three terminals where you run the following (you need the ports 8081, 8082, and 8083 available):

java -Xms1G -Xmx1G -XX:+AlwaysPreTouch \

-XX:+UnlockDiagnosticVMOptions -XX:+DebugNonSafepoints \

-Duser.language=en-US \

-Xlog:safepoint,gc+humongous=trace \

-jar first-application/target/first-application-0.0.1-SNAPSHOT.jar

java -Xms1G -Xmx1G -XX:+AlwaysPreTouch \

-jar second-application/target/second-application-0.0.1-SNAPSHOT.jar

java -Xms1G -Xmx1G -XX:+AlwaysPreTouch \

-jar third-application/target/third-application-0.0.1-SNAPSHOT.jar

I’m using Corretto 17.0.2:

$ java -version

openjdk version "17.0.2" 2022-01-18 LTS

OpenJDK Runtime Environment Corretto-17.0.2.8.1 (build 17.0.2+8-LTS)

OpenJDK 64-Bit Server VM Corretto-17.0.2.8.1 (build 17.0.2+8-LTS, mixed mode, sharing)

And to create simple load tests, I’m using an ancient tool:

$ ab -V

This is ApacheBench, Version 2.3 <$Revision: 1879490 $>

Copyright 1996 Adam Twiss, Zeus Technology Ltd, http://www.zeustech.net/

Licensed to The Apache Software Foundation, http://www.apache.org/

I will not go through the source code to explain all the details before I jump into examples. I often profile applications with source code I haven’t seen in my work. Just consider it as a typical micro-service build with Spring Boot and Hibernate.

In all the examples, I will assume that the application is started with the java -jar command.

If you are running the application from the IDE, then the name of the application is switched

from first-application-0.0.1-SNAPSHOT.jar to FirstApplication.

All the JFRs generated while writing that post are available here.

How to run an Async-profiler

Command line

One of the easiest ways of running the async-profiler is using the command line. You just need to execute the following in the profiler folder:

./profiler.sh -e <event type> -d <duration in seconds> \

-f <output file name> <pid or application name>

# examples

./profiler.sh -e cpu -d 10 -f prof.jfr first-application-0.0.1-SNAPSHOT.jar

./profiler.sh -e wall -d 10 -f prof.html 1234 # where 1234 is the PID of the Java process

There are a lot of additional switches that are explained in the README.

You can also use async-profiler to output JFR files:

./profiler.sh start -e <event type> -f <output JFR file name> <pid or application name>

# do something

./profiler.sh stop -f <output JFR file name> <pid or application name>

For formats different from JFR, you need to pass the file name during stop, but for JFR

it is needed during start.

The profiler.sh script was removed in the 3.0 release, now we need to use asprof executable.

WARNING: This way of attaching any profiler to a JVM is vulnerable to JDK-8212155. That issue can crash your JVM during attachment. It has been fixed in JDK 17.

If you are attaching a profiler this way, it is recommended to use -XX:+UnlockDiagnosticVMOptions -XX:+DebugNonSafepoints

JVM flags (see this blog post by Jean-Philippe Bempel for more information on why these flags are essential).

During JVM startup

You can add a parameter when you are starting a java process:

java -agentpath:/path/to/libasyncProfiler.so=start,event=cpu,file=prof.jfr

The parameters passed this way differ from the switches used in the command line approach. You can find the list of parameters in the arguments.cpp file and the mapping between those in the profiler.sh file in the source code.

You can also attach a profiler without starting it using -agentpath, which is the safest way of starting your JVM

if you want to profile it anytime soon.

From Java API

The Java API is published to maven central. All you need to do is to include a dependency:

<dependency>

<groupId>tools.profiler</groupId>

<artifactId>async-profiler</artifactId>

<version>2.9</version>

</dependency>

That gives you an API where you can use Async-profiler from Java code. Example usage:

AsyncProfiler profiler = AsyncProfiler.getInstance();

That gives you an instance of AsyncProfiler object, with which you can send orders to the profiler:

profiler.execute(String.format("start,jfr,event=wall,file=%s.jfr", fileName));

// do something, like sleep

profiler.execute(String.format("stop,file=%s.jfr", fileName));

Since async-profiler 2.9, the AsyncProfiler.getInstance() extracts and loads the libasyncProfiler.so from the JAR.

In the previous version, this file needed to be in one of the following directories:

/usr/java/packages/lib/usr/lib64/lib64/lib/usr/lib

You can also point to any location of that file with API:

AsyncProfiler profiler = AsyncProfiler.getInstance("/path/to/libasyncProfiler.so");

From JMH benchmark

It’s worth mentioning that the async-profiler is supported in JMH benchmarks. If you have one, you just need to run the following:

java -jar benchmarks.jar -prof async:libPath=/path/to/libasyncProfiler.so\;output=jfr\;event=cpu

JMH will take care of every magic, and you get proper JFR files from async-profiler.

AP-Loader

There is a pretty new project called AP-Loader by Johannes Bechberger that can also be helpful to you. This project packages all native distributions into a single JAR, so It is convenient when deploying on different CPU architectures. You can also use the Java API with this loader without caring where the binary of the profiler is located and which platform you’re running on. I recommend reading the README of that project. It may be suitable for you.

IntelliJ Idea Ultimate

If you are using IntelliJ Idea Ultimate, you have a built-in async-profiler at your fingertips. You can profile any JVM running on your machine and visualize the results. Honestly, I don’t use it that much. Most of the time, I run profilers on remote machines, and I’ve got used to it, so I run it the same way on my localhost.

Output formats

Async-profiler gives you a choice of how the results should be saved:

- default - printing results to the terminal

- JFR

- Collapsed stack

- Flame graphs

- …

From that list, I choose JFR 95% of the time. It’s a binary format containing all the information gathered by the profiler. That file can be post-processed later by some external tool. I’m using my

own open-sourced JVM profiling toolkit,

which can read JFR files with additional filters and gives me the possibility to add/remove additional

levels during the conversion to a flame graph. I will use the filter names of my viewer in the following and the names of filters from my viewer.

There are other products (including the jfr2flame converter that is a part of async-profiler)

that can visualize the JFR output, but you should use the tool that works

for you. None worked for me, so I wrote my own, but it doesn’t mean it is the best choice for everybody.

All flame graphs in this post are generated by my tool from JFR files. The JFR file format for each sample contains the following:

- Stack trace

- Java thread name

- Thread state (is the Java thread consumes CPU)

- Timestamp

- Monitor class - for

lockmode - Waiting for lock duration - for

lockmode - Allocated object class - for

allocmode - Allocated object size - for

allocmode - Context ID - if you are using async-profiler with Context ID PR merged

So all the information is already there. We just need to extract what we need and present it visually.

Flame graphs

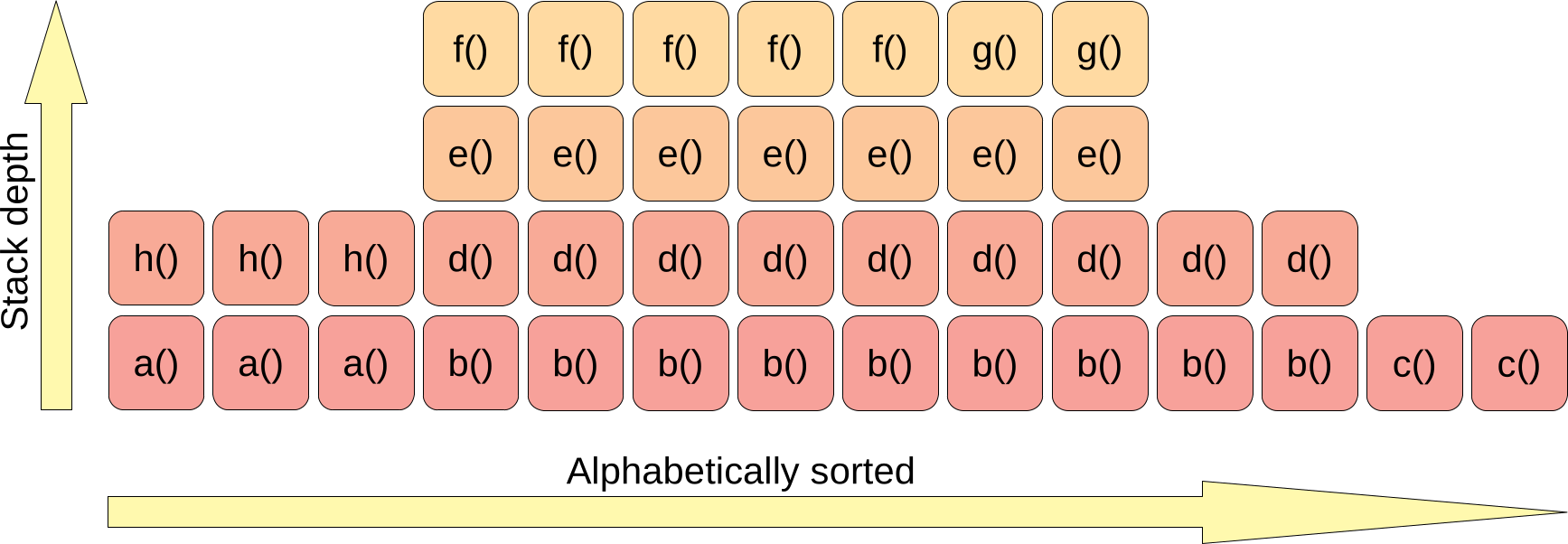

If you do sampling profiling you need to visualize the results. The results are nothing more than a set of stack traces. My favorite visualization is a flame graph. The easiest way to understand what flame graphs are is to know how they are created.

First, we draw a rectangle for each frame of each stack trace. The stack traces are drawn bottom-up and sorted alphabetically. For example, such a graph looks like this:

This corresponds to the following set of stack traces:

- 3 samples -

a() -> h() - 5 samples -

b() -> d() -> e() -> f() - 2 samples -

b() -> d() -> e() -> g() - 2 samples -

b() -> d() - 2 samples -

c()

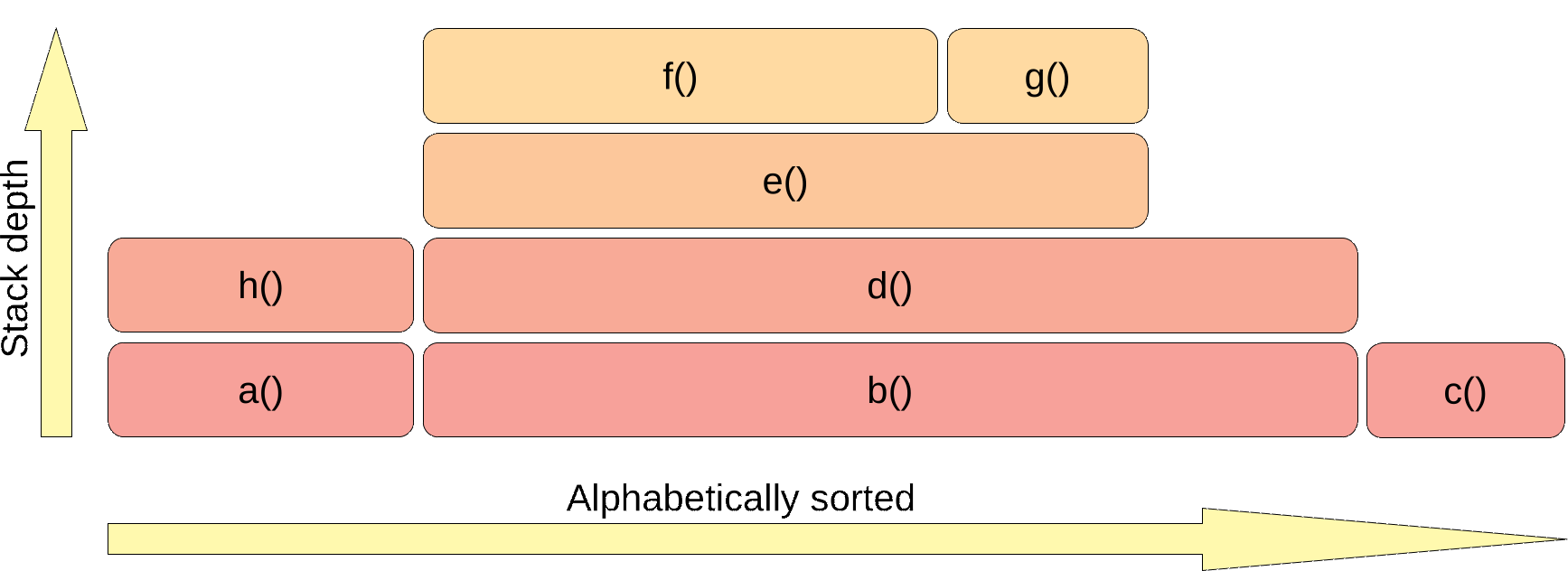

The next step is joining the rectangles with the same method name to one bar:

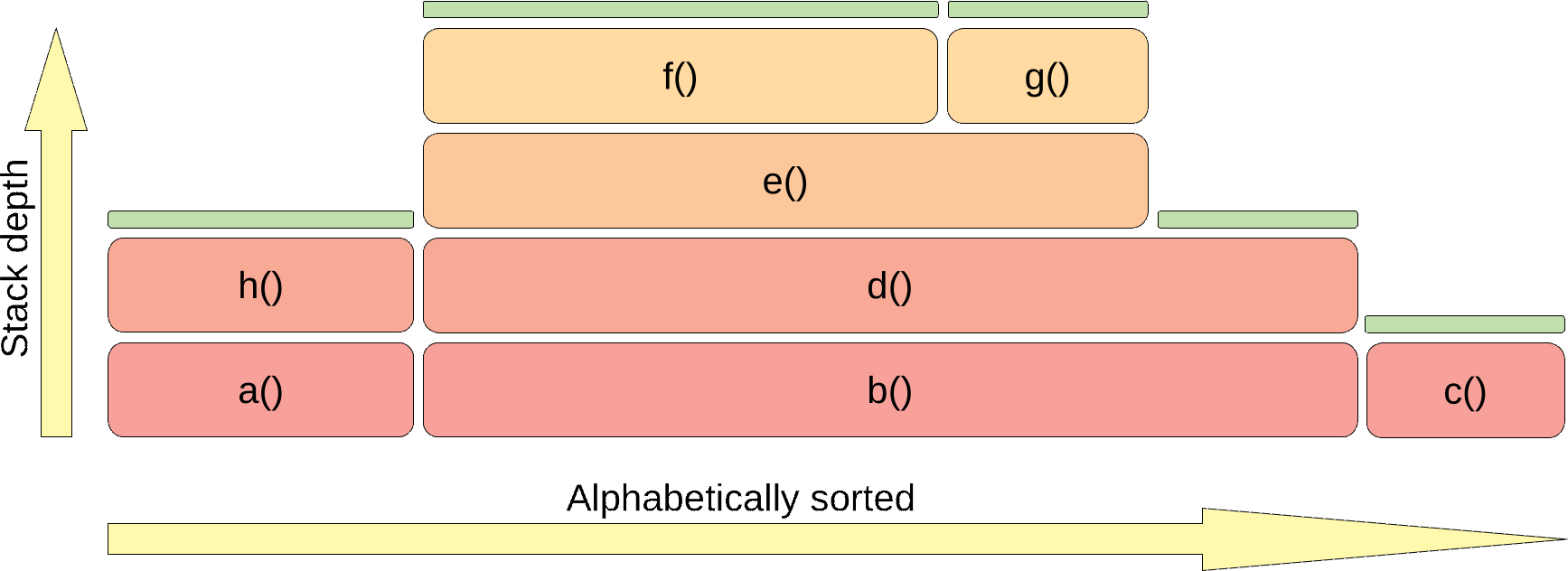

The flame graph usually shows you how your application utilizes a specific resource. The resource is utilized by the top methods of that graph (visualized with green bar):

So in this example, method b() does not utilize the resource. It just invokes methods that transitively use it. Flame graphs

are commonly used for the CPU utilization, but the CPU is just one of the resources we can visualize this way.

If you use wall-clock mode, your resource is time. If you use allocation mode, then your resource is

heap. If you want to learn more about flame graphs, you can check

Brendan’s Gregg video, he invented flame graphs.

Basic resources profiling

Before you start any profiler, the first thing you need to know is what your goal is. Only after that can you choose the proper mode of async-profiler. Let’s start with the basics.

Wall-clock

If your goal is to optimize time, you should run the async-profiler in wall-clock mode. This is the most common mistake made by engineers starting their journey with profilers. The majority of applications that I profiled so far were applications that were working with a distributed architecture, using some DBs, MQ, Kafka, … In such applications, the majority of time is spent on IO - waiting for other services/DB/… to respond. During such actions, Java is not using the CPU.

# warmup

ab -n 100 -c 4 http://localhost:8081/examples/wall/first

ab -n 100 -c 4 http://localhost:8081/examples/wall/second

# profiling of the first request

./profiler.sh start -e cpu -f first-cpu.jfr first-application-0.0.1-SNAPSHOT.jar

ab -n 100 -c 4 http://localhost:8081/examples/wall/first

./profiler.sh stop -f first-cpu.jfr first-application-0.0.1-SNAPSHOT.jar

./profiler.sh start -e wall -f first-wall.jfr first-application-0.0.1-SNAPSHOT.jar

ab -n 100 -c 4 http://localhost:8081/examples/wall/first

./profiler.sh stop -f first-wall.jfr first-application-0.0.1-SNAPSHOT.jar

# profiling of the second request

./profiler.sh start -e cpu -f second-cpu.jfr first-application-0.0.1-SNAPSHOT.jar

ab -n 100 -c 4 http://localhost:8081/examples/wall/second

./profiler.sh stop -f second-cpu.jfr first-application-0.0.1-SNAPSHOT.jar

./profiler.sh start -e wall -f second-wall.jfr first-application-0.0.1-SNAPSHOT.jar

ab -n 100 -c 4 http://localhost:8081/examples/wall/second

./profiler.sh stop -f second-wall.jfr first-application-0.0.1-SNAPSHOT.jar

In the ab output, we can see that the basic stats are similar for each request:

# first request

Connection Times (ms)

min mean[+/-sd] median max

Connect: 0 0 0.1 0 0

Processing: 521 654 217.9 528 1055

Waiting: 521 654 217.9 528 1054

Total: 521 654 217.9 528 1055

# second request

Connection Times (ms)

min mean[+/-sd] median max

Connect: 0 0 0.1 0 1

Processing: 522 665 190.2 548 1028

Waiting: 522 665 190.1 548 1028

Total: 522 665 190.2 549 1029

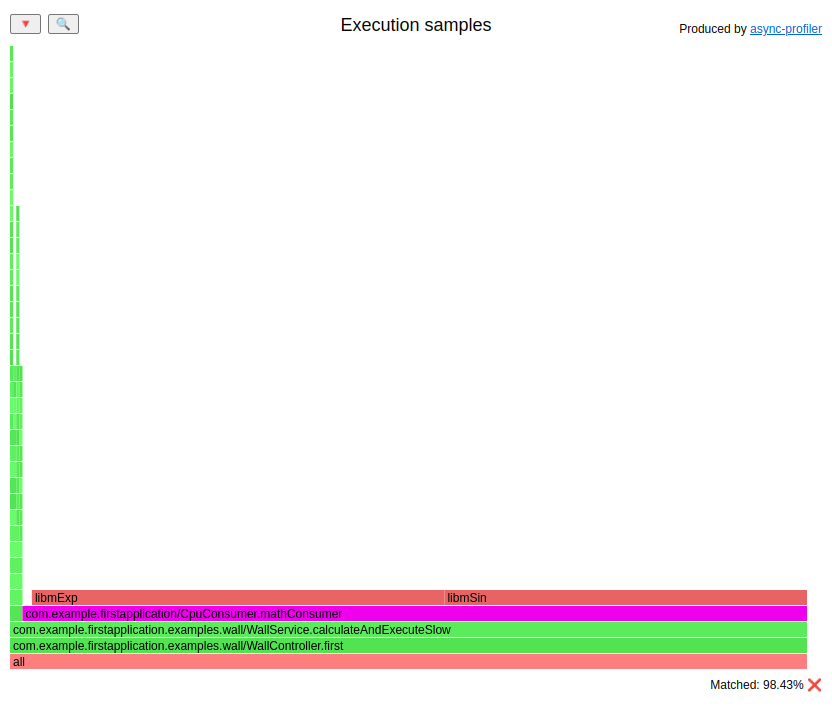

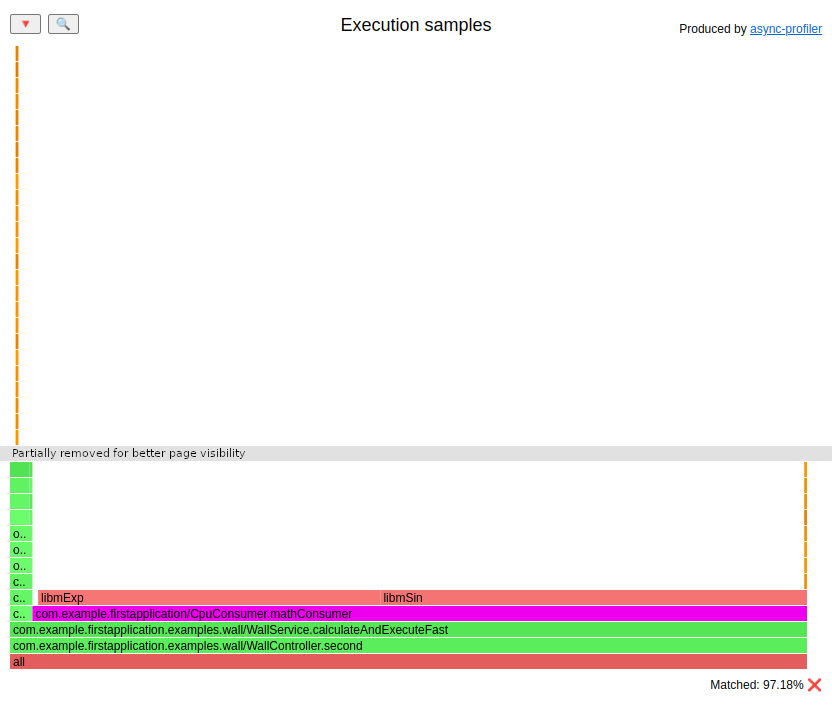

To give you a taste of how the CPU profile can mislead you, here are flame graphs for those two executions in CPU mode.

First execution: (HTML)

Second execution: (HTML)

I agree that they are not identical. But they have one thing in common.

They show that the most CPU-consuming method invoked by my controller is CpuConsumer.mathConsumer().

It is not a lie: It consumes CPU. But does it consume most of the time of the request?

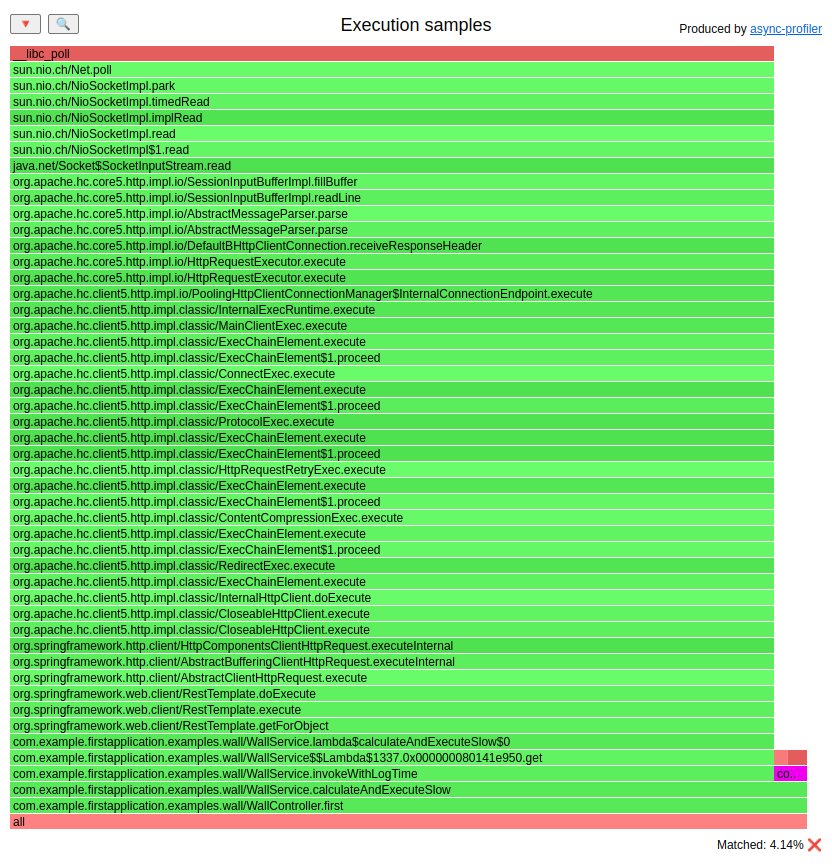

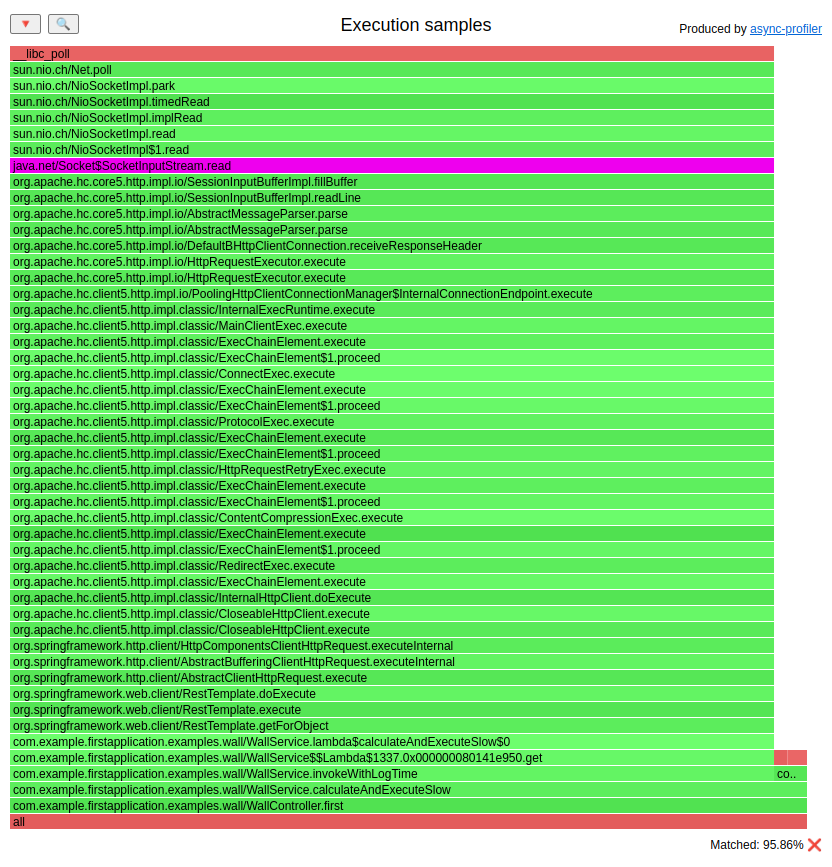

Look at the flame graphs in wall-clock mode:

First execution: (HTML)

Second execution: (HTML)

I highlighted the ```CpuConsumer.mathConsumer()`` method. Wall-clock mode shows us that this method is responsible just for ~4% of execution time.

Thing to remember: if your goal is to optimize time, and you use external systems (including DBs, queues, topics, microservices) or locks, sleeps, disk IO, It would help if you started with wall-clock mode.

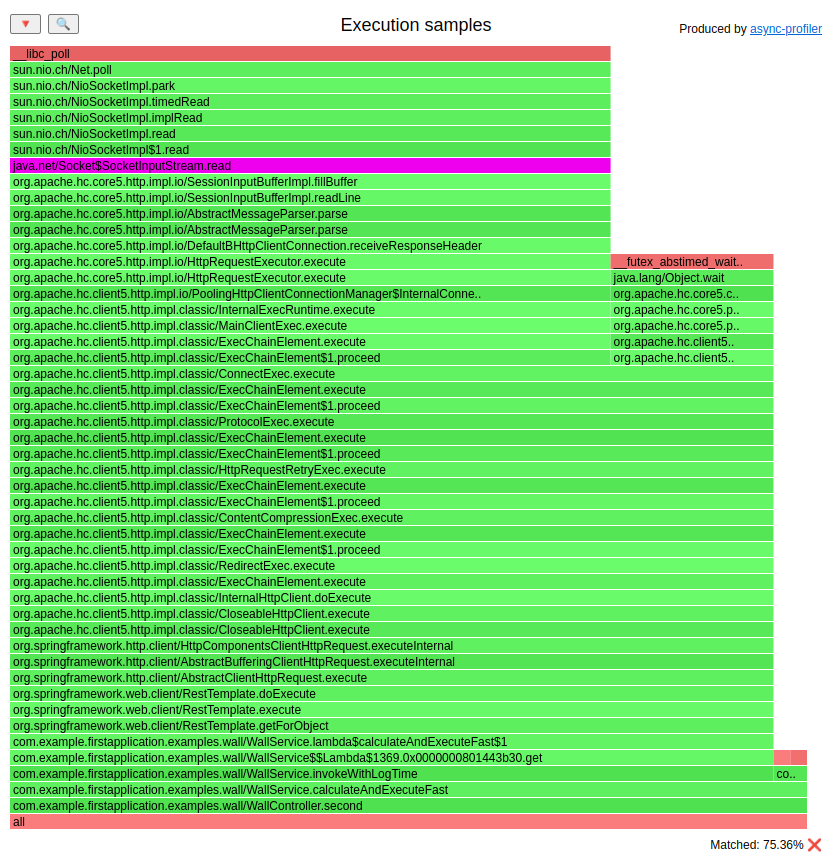

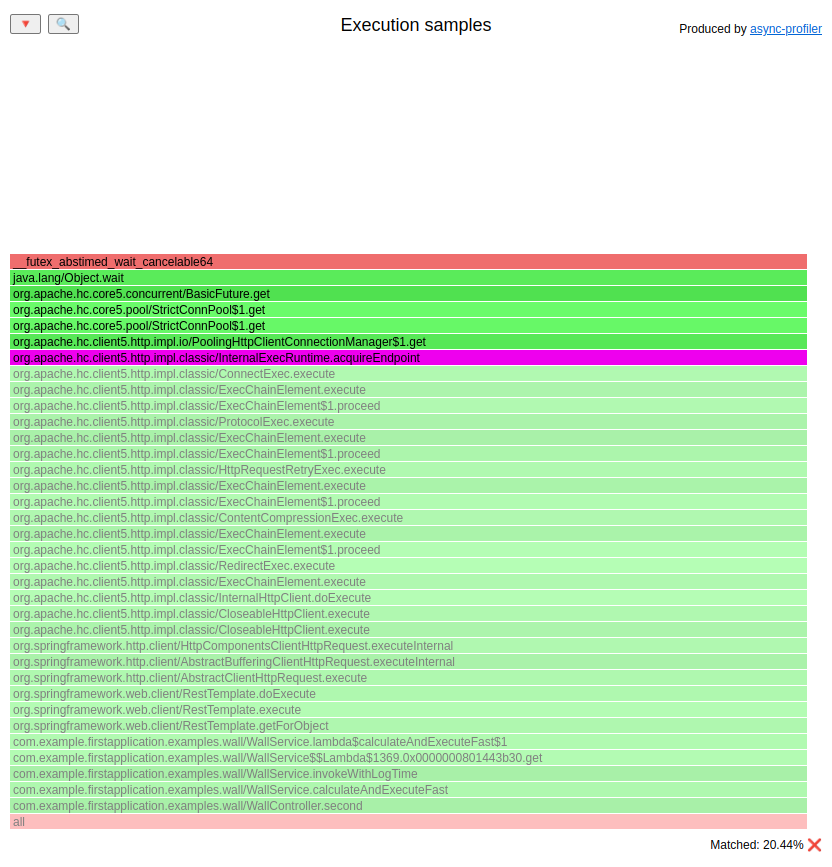

In wall-clock mode, we can also see that these flame graphs differ. The first

execution spends most of its time in SocketInputStream.read():

Over 95% of the time is consumed there. But the second execution:

spends just 75% on the socket. To the right of the method

SocketInputStream.read() you can spot an additional bar. Let’s zoom in:

It’s the InternalExecRuntime.acquireEndpoint() method, which executes

PoolingHttpClientConnectionManager$1.get() from Apache HTTP Client, which

in the end executes Object.wait(). What does it do? Basically, what we are trying

to do in those two executions is to invoke a remote REST service. The first execution

uses an HTTP Client instance with 20 available connections, so no thread

needs to wait for a connection from the pool:

// FirstApplicationConfiguration

@Bean("pool20RestTemplate")

RestTemplate pool20RestTemplate() {

return createRestTemplate(20);

}

// WallService

void calculateAndExecuteSlow() {

Random random = ThreadLocalRandom.current();

CpuConsumer.mathConsumer(random.nextDouble(), CPU_MATH_ITERATIONS);

invokeWithLogTime(() ->

pool20RestTemplate.getForObject(SECOND_APPLICATION_URL +

"/examples/wall/slow", String.class)

);

}

The time spent on a socket is entirely spent waiting for the REST endpoint to respond.

The second execution uses a different instance of RestTemplate that has just

3 connections in the pool. Since the load is generated from 4 threads

by the ab, one thread must wait for a connection from the pool.

You may think this is a stupid human error, that someone created a pool without enough

connections. In the real world, the problem is with defaults that

are quite low. In our testing application, the default settings for the thread pool are:

maxTotal- 25 connections totally in the pooldefaultMaxPerRoute- 5 connections to the same address

That number varies between versions. I remember one application with HTTP Client 4.x with defaults set to 2.

There are plenty of tools that log the invocation time of external services. The common

problem in those tools is that the time waiting on the pool for a connection is usually

included in the invocation time, which is a lie. I saw this in the past when the caller

had a line in the logs that gave an execution time of X ms; the callee had a similar log

that presented 1/10 * X ms. What were those teams doing to understand that? They

tried to convince the network department that this was a network issue. Big waste of time.

I also saw plenty of custom logic that traced external execution time. In our application you can see such a pattern:

void calculateAndExecuteFast() {

// ...

invokeWithLogTime(() ->

pool3RestTemplate.getForObject(SECOND_APPLICATION_URL + "/examples/wall/fast", String.class)

);

}

private <T> T invokeWithLogTime(Supplier<T> toInvoke) {

StopWatch stopWatch = new StopWatch();

stopWatch.start();

T ret = toInvoke.get();

stopWatch.stop();

log.info("External WS invoked in: {}ms", stopWatch.getTotalTimeMillis());

return ret;

}

The logs don’t trace the time using an external service; the time also includes all the magic done by Spring, including waiting for a connection from the pool. You can easily see that for the second request, the logs look like this:

External WS invoked in: 937ms

But the second service that is invoked is:

@GetMapping("/fast")

String fast() throws InterruptedException {

Thread.sleep(500);

return "OK";

}

Wall-clock - filtering

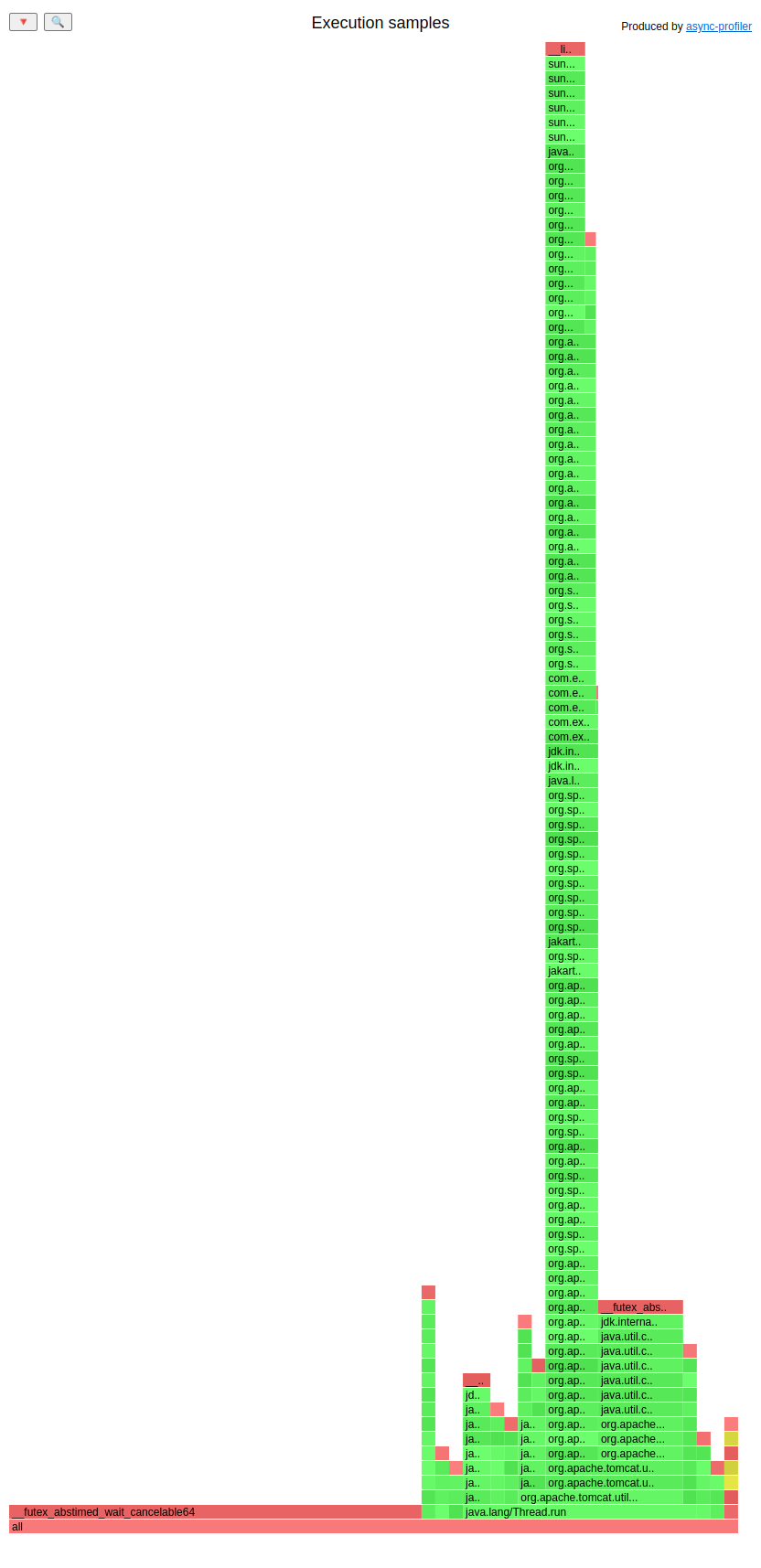

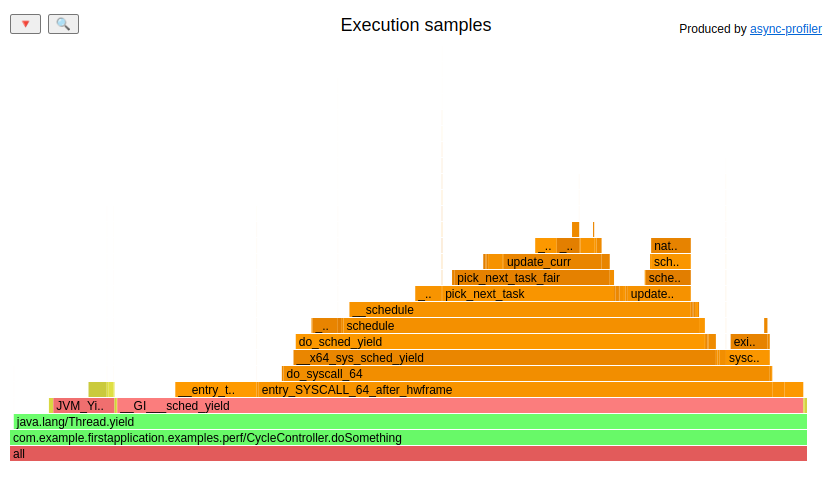

If you open the wall-clock flame graph for the first time, you may be confused, since it usually looks like this:

You see all the threads, even if they are sleeping or waiting in some queue for a job.

Wall-clock shows you all of them. Most of the time, you want to focus on the frames where

your application is doing something, not when it is waiting.

All you need to do is to filter the stack traces. If you are using Spring Boot

with the embedded Tomcat, you can filter

stack traces that contain the SocketProcessorBase.run method.

In my viewer, you can just paste it to stack trace filter, and you are done.

It’s just a matter of proper filtering if you want to focus on one controller, class, method, etc.

CPU - easy-peasy

If you know that your application is CPU intensive, or you want to decrease CPU consumption, then the CPU mode is suitable.

Let’s prepare our application:

# execute it once

curl -v http://localhost:8081/examples/cpu/prepare

# little warmup

ab -n 5 -c 1 http://localhost:8081/examples/cpu/inverse

# profiling time:

./profiler.sh start -e cpu -f cpu.jfr first-application-0.0.1-SNAPSHOT.jar

ab -n 5 -c 1 http://localhost:8081/examples/cpu/inverse

./profiler.sh stop -f cpu.jfr first-application-0.0.1-SNAPSHOT.jar

You can check during the benchmark what the CPU utilization of our JVM is:

$ pidstat -p `pgrep -f first-application-0.0.1-SNAPSHOT.jar` 5

09:42:49 UID PID %usr %system %guest %wait %CPU CPU Command

09:42:54 1000 49813 115,40 0,40 0,00 0,00 115,80 1 java

09:42:59 1000 49813 111,40 0,60 0,00 0,00 112,00 1 java

09:43:04 1000 49813 106,80 0,20 0,00 0,00 107,00 1 java

09:43:09 1000 49813 113,00 0,20 0,00 0,00 113,20 1 java

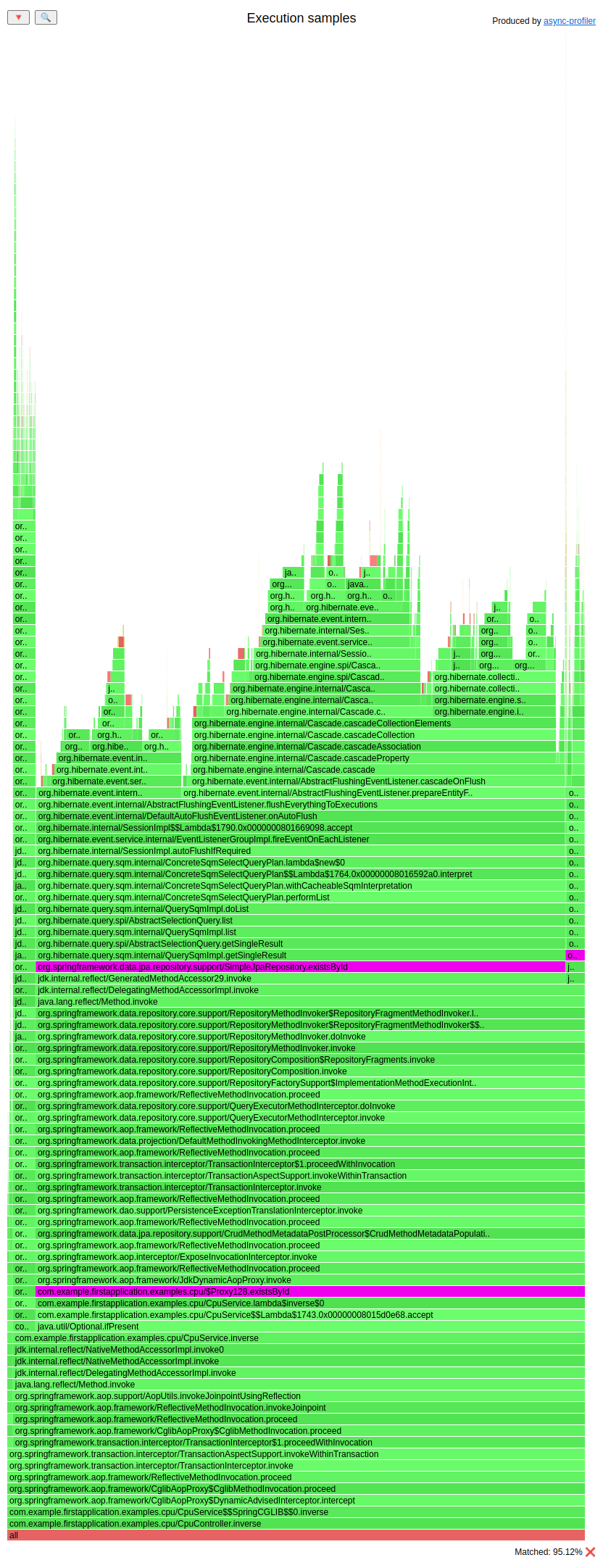

We are using a bit more than one CPU core. Our load generator creates the load with a single thread so that the CPU usage is pretty high. Let’s see what our CPU is doing while executing our spring controller: (HTML)

I know that flame graph is pretty large, but hey, welcome to Spring and Hibernate.

I highlighted the existsById() method. You can see that it consumes 95% of the

CPU time. But why? It doesn’t look scary at all when looking at the code:

@Transactional

public void inverse(UUID uuid) {

sampleEntityRepository.findById(uuid).ifPresent(sampleEntity -> {

boolean allConfigPresent = true;

for (int i = 0; i < 100; i++) {

allConfigPresent = allConfigPresent && sampleConfigurationRepository.existsById("key-" + i);

}

sampleEntity.setFlag(!sampleEntity.isFlag());

});

}

We are just executing existsById() on the Spring Data JPA repository. The answer

why that method is slow is at the beginning of the method:

sampleEntityRepository.findById(uuid)

and in JPA mapping:

public class SampleEntity {

@Id

private UUID id;

@Fetch(FetchMode.JOIN)

@JoinColumn(name = "fk_entity")

@OneToMany(cascade = CascadeType.ALL, fetch = FetchType.EAGER, orphanRemoval = true)

private Set<SampleSubEntity> subEntities;

private boolean flag;

}

This means that when we get one SampleEntity by id, we also extract the subEntities from the database because of fetch = FetchType.EAGER.

This is not a problem yet. All JPA entities are loaded into the Hibernate session.

That mechanism is pretty cool because it gives you the dirty checking functionality.

The downside, however, is that the dirty entities need to be flushed by Hibernate

to DB. You have different flush strategies in Hibernate. The default one is AUTO:

you can read about them in the

Javadocs.

What you see in the flame graph is exactly Hibernate looking for dirty entities that

should be flushed.

What can we do about this? Well, first of all, it should be forbidden to develop

a large Hibernate application without reading

Vlad Mihalcea’s book.

If you are developing such an application, buy that book, it’s great. From my experience,

some engineers tend to abuse Hibernate. Let’s look at the code sample I pasted

before. We are loading a huge SampleEntity. What are we doing with it?

@Transactional

public void inverse(UUID uuid) {

sampleEntityRepository.findById(uuid).ifPresent(sampleEntity -> {

// irrelevant

sampleEntity.setFlag(!sampleEntity.isFlag());

});

}

So we’re basically changing one column in one row in the DB. We can do it more efficiently

by using the update query, even with Spring Data JPA repository or simple JDBC.

But do we really need to use Hibernate everywhere?

CPU - a bit harder

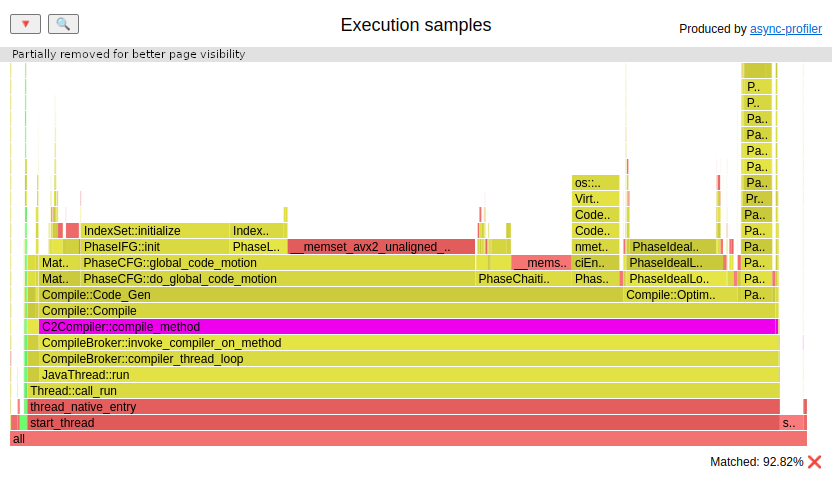

Sometimes the result of a CPU profiler is just the beginning of the fun. Let’s consider the following example:

# little warmup

ab -n 10 -c 1 http://localhost:8081/examples/cpu/matrix-slow

# profiling time

./profiler.sh start -e cpu -f matrix-slow.jfr first-application-0.0.1-SNAPSHOT.jar

ab -n 10 -c 1 http://localhost:8081/examples/cpu/matrix-slow

./profiler.sh stop -f matrix-slow.jfr first-application-0.0.1-SNAPSHOT.jar

# little warmup

ab -n 10 -c 1 http://localhost:8081/examples/cpu/matrix-fast

# profiling time

./profiler.sh start -e cpu -f matrix-fast.jfr first-application-0.0.1-SNAPSHOT.jar

ab -n 10 -c 1 http://localhost:8081/examples/cpu/matrix-fast

./profiler.sh stop -f matrix-fast.jfr first-application-0.0.1-SNAPSHOT.jar

Let’s see the run times of the matrix-slow request:

min mean[+/-sd] median max

Connect: 0 0 0.0 0 0

Processing: 1601 1795 181.5 1785 2078

Waiting: 1600 1794 181.5 1785 2077

Total: 1601 1795 181.5 1786 2078

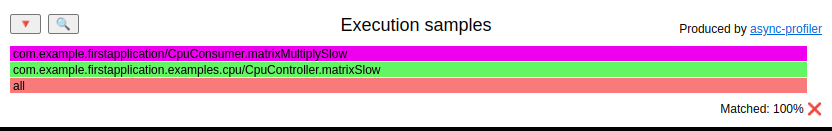

The profile looks like the following: (HTML)

The whole CPU is wasted in the matrixMultiplySlow method:

public static int[][] matrixMultiplySlow(int[][] a, int[][] b, int size) {

int[][] result = new int[size][size];

for (int i = 0; i < size; i++) {

for (int j = 0; j < size; j++) {

int sum = 0;

for (int k = 0; k < size; k++) {

sum += a[i][k] * b[k][j];

}

result[i][j] = sum;

}

}

return result;

}

If we look at the run times of the matrix-fast request:

min mean[+/-sd] median max

Connect: 0 0 0.0 0 0

Processing: 107 114 6.5 114 128

Waiting: 106 113 6.5 113 128

Total: 107 114 6.5 114 128

That request is 18 times faster than the matrix-slow request, but if we look at the profile

(HTML)

We see that the whole CPU is wasted in the method matrixMultiplyFaster:

public static int[][] matrixMultiplyFaster(int[][] a, int[][] b, int size) {

int[][] result = new int[size][size];

for (int i = 0; i < size; i++) {

for (int k = 0; k < size; k++) {

int current = a[i][k];

for (int j = 0; j < size; j++) {

result[i][j] += current * b[k][j];

}

}

}

return result;

}

Both methods matrixMultiplySlow and matrixMultiplyFaster have the same complexity O(n^3). So

why is one faster than the other? Well, if you want to understand exactly how a CPU-intensive algorithm works,

you need to understand how the CPU works, which is far away from the topic of this post. Be aware that if

you want to optimize such algorithms; you will probably need at least one of the following:

- Knowledge of CPU architecture

- Top-Down performance analysis methodology

- Looking at the ASM of generated methods

Many Java programmers forget that all the execution is done on the CPU. Java needs to use ASM to run on the CPU. That’s basically what the JIT compiler does: It converts your hot methods and loops into optimized ASM. At the assembly level, you can check, for example, if the JIT used vectorized instruction for your loops. So yes, sometimes you need to get dirty with such low-level stuff. For now, async-profiler gives you a hint on which methods to focus.

We will return to this example in the Cache misses section.

Allocation

The most common use cases where the allocations are tracked are:

- decreasing GC run frequency

- finding allocations outside the TLAB, which are done in the slow path

- fighting with single/tens of milliseconds latency, where even the creation of heap objects matters

First, let’s understand how new objects on a heap are created so we have a better understanding of what the async-profiler shows us.

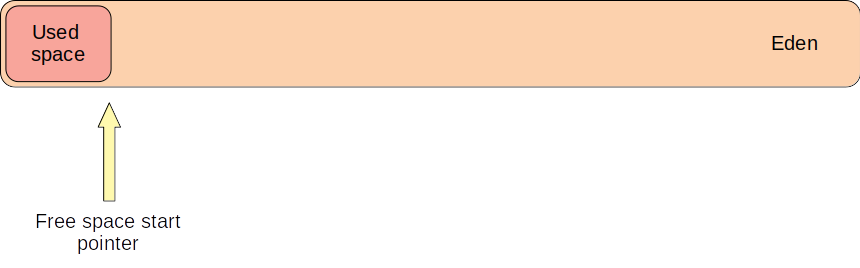

A portion of our Java heap is called an eden. This is a place where new objects are born. Let’s assume for simplicity that eden is a continuous slice of memory. The very efficient way of allocation in such a case is called bumping pointer. We keep a pointer to the first address of the free space:

When we do new Object(), we simply calculate its size, locate

the next free address and bump the pointer by sizeof(Object):

But there is one major problem with that technique: We have to synchronize the object allocation if we have more than one thread that can create new objects in parallel, but this is quite costly. We solve this by giving each thread a portion of eden dedicated to only that thread. This portion is called TLAB - thread-local allocation buffer. With this, each thread can use bumping pointer at its TLAB safely and in parallel.

Introducing TLABs creates two more issues that the JVM needs to deal with:

- a thread can allocate an object, but there is not enough space in its TLAB - the JVM creates a new TLAB if there is still space in eden

- a thread can allocate a big object, so it’s not optimal to use the TLAB mechanism - the JVM will use the slow path of the allocation that allocates the object directly in eden or in the old generation

What is important to us is that in both these cases, the JVM emits an event that a profiler can capture. That’s basically how async-profiler samples allocations:

- if the allocation of an object needed a new TLAB - we see an aqua frame for that

- if the allocation was done outside the TLAB - we see a brown frame

In real-world systems, the frequency of GC can be monitored by systems like Grafana or Zabbix. Here we have a synthetic application, so let’s measure the allocation size differently:

# little warmup

ab -n 2 -c 1 http://localhost:8081/examples/alloc/

# measuring the heap allocations of a request

jcmd first-application-0.0.1-SNAPSHOT.jar GC.run

jcmd first-application-0.0.1-SNAPSHOT.jar GC.heap_info

ab -n 10 -c 1 http://localhost:8081/examples/alloc/

jcmd first-application-0.0.1-SNAPSHOT.jar GC.heap_info

# profiling time

./profiler.sh start -e alloc -f alloc.jfr first-application-0.0.1-SNAPSHOT.jar

ab -n 1000 -c 1 http://localhost:8081/examples/alloc/

./profiler.sh stop -f alloc.jfr first-application-0.0.1-SNAPSHOT.jar

Let’s look at the output of the GC.heap_info command:

garbage-first heap total 1048576K, used 41926K [0x00000000c0000000, 0x0000000100000000)

region size 1024K, 2 young (2048K), 0 survivors (0K)

Metaspace used 67317K, committed 67904K, reserved 1114112K

class space used 9868K, committed 10176K, reserved 1048576K

garbage-first heap total 1048576K, used 110018K [0x00000000c0000000, 0x0000000100000000)

region size 1024K, 8 young (8192K), 0 survivors (0K)

Metaspace used 67317K, committed 67904K, reserved 1114112K

class space used 9868K, committed 10176K, reserved 1048576K

We executed alloc requests ten times, and our heap usage has increased from 41926K to 110018K.

So we are creating over 6MB of objects per request on the heap. If we look at the controller source code

it’s hard to justify that:

@RestController

@RequestMapping("/examples/alloc")

@RequiredArgsConstructor

class AllocController {

@GetMapping("/")

String get() {

return "OK";

}

}

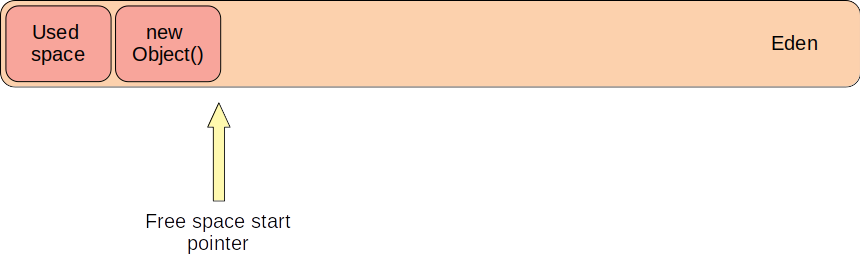

Let’s look at the allocation flame graph: (HTML)

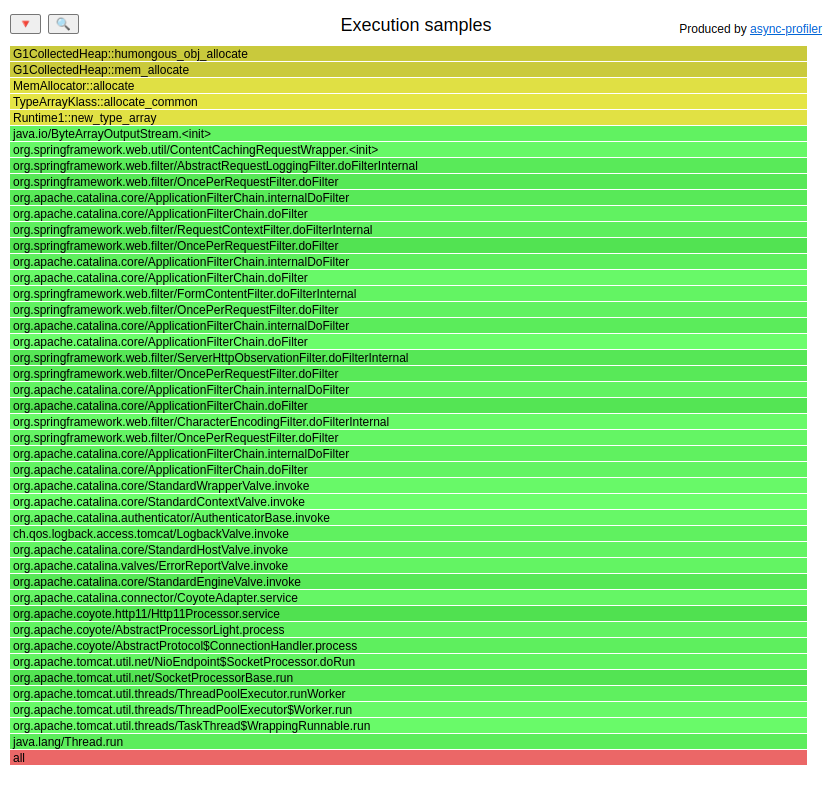

The class AbstractRequestLoggingFilter is responsible for over 99% of recorder allocations.

You can find its main creation sites using the techniques from the Methods profiling section; feel free to skip it for now.

Here is the answer:

@Bean

CommonsRequestLoggingFilter requestLoggingFilter() {

CommonsRequestLoggingFilter loggingFilter = new CommonsRequestLoggingFilter() {

@Override

protected boolean shouldNotFilter(HttpServletRequest request) throws ServletException {

return !request.getRequestURI().contains("alloc");

}

};

loggingFilter.setIncludeClientInfo(true);

loggingFilter.setIncludeQueryString(true);

loggingFilter.setIncludePayload(true);

loggingFilter.setMaxPayloadLength(5 * 1024 * 1024);

loggingFilter.setIncludeHeaders(true);

return loggingFilter;

}

I have seen such code many times; this CommonsRequestLoggingFilterclass helps you log

the REST endpoint communication. The setMaxPayloadLength() method sets the maximum number of bytes of the payload which are logged.

You can browse over the Spring source code to see that the implementation creates byte arrays of

such size in the constructor. No matter how big the payload is, we always create a 5MB array here.

The advice that I gave to users of that code was to create their own filter that would do the same job but allocate the array lazily.

Allocation - humongous objects

If you use the G1 garbage collector, JVM’s default since JDK 9, your heap is divided into regions. The region sizes vary from 1 MB to 32 MB depending on the heap size. The goal is to have no more than 2048 regions. You can check the region size for different heap sizes with the following:

java -Xms1G -Xmx1G -Xlog:gc,exit*=debug -version

The output contains a line containing the region size 1024K information.

If you are trying to allocate an object larger or equal to half of the region size, you are doing a humongous allocation. Long story short: It has been, and it is, painful. It is allocated directly in the old generation but is cleared during minor GCs. I saw situations where G1 GC needed to invoke a FullGC phase because of the humongous allocation. If you do much of this, G1 will also invoke more concurrent collections, which can waste your CPU.

While running the previous example, you could spot in first-application-0.0.1-SNAPSHOT.jar logs:

...

[70,149s][debug][gc,humongous] GC(34) Reclaimed humongous region 436 (object size 5242896 @ 0x00000000db400000)

[70,149s][debug][gc,humongous] GC(34) Reclaimed humongous region 442 (object size 5242896 @ 0x00000000dba00000)

[70,149s][debug][gc,humongous] GC(34) Reclaimed humongous region 448 (object size 5242896 @ 0x00000000dc000000)

[70,149s][debug][gc,humongous] GC(34) Reclaimed humongous region 454 (object size 5242896 @ 0x00000000dc600000)

...

These GC logs tell us that some humongous object of size 5242896 was reclaimed. The nice thing

about JFR files is that they also keep the size of sampled allocations. Using this, we should be able to find out

the stack trace that has created that object.

We don’t need sophisticated JFR viewers for that. We get the jfr command with any JDK distribution. Let’s use it:

$ jfr summary alloc.jfr

...

Event Type Count Size (bytes)

=========================================================

jdk.ObjectAllocationOutsideTLAB 1013 19220

jdk.ObjectAllocationInNewTLAB 359 6719

...

Let’s focus on allocations outside the TLAB; it is unlikely to allocate humongous objects in the TLAB.

$ jfr print --events jdk.ObjectAllocationOutsideTLAB --stack-depth 10 alloc.jfr

...

jdk.ObjectAllocationOutsideTLAB {

startTime = 2022-12-05T08:56:04.354766183Z

objectClass = byte[] (classLoader = null)

allocationSize = 5242896

eventThread = "http-nio-8081-exec-9" (javaThreadId = 49)

stackTrace = [

java.io.ByteArrayOutputStream.<init>(int) line: 81

org.springframework.web.util.ContentCachingRequestWrapper.<init>(HttpServletRequest, int) line: 90

org.springframework.web.filter.AbstractRequestLoggingFilter.doFilterInternal(HttpServletRequest, HttpServletResponse, FilterChain) line: 281

org.springframework.web.filter.OncePerRequestFilter.doFilter(ServletRequest, ServletResponse, FilterChain) line: 116

org.apache.catalina.core.ApplicationFilterChain.internalDoFilter(ServletRequest, ServletResponse) line: 185

org.apache.catalina.core.ApplicationFilterChain.doFilter(ServletRequest, ServletResponse) line: 158

org.springframework.web.filter.RequestContextFilter.doFilterInternal(HttpServletRequest, HttpServletResponse, FilterChain) line: 100

org.springframework.web.filter.OncePerRequestFilter.doFilter(ServletRequest, ServletResponse, FilterChain) line: 116

org.apache.catalina.core.ApplicationFilterChain.internalDoFilter(ServletRequest, ServletResponse) line: 185

org.apache.catalina.core.ApplicationFilterChain.doFilter(ServletRequest, ServletResponse) line: 158

...

]

}

...

We can easily match allocationSize = 5242896` with the object size from the GC logs, so we can find and eliminate humongous allocations using that technique. You can filter the allocation

JFR file for objects with a size larger or equal to half of our G1 region size. All of

these allocations are humongous allocations.

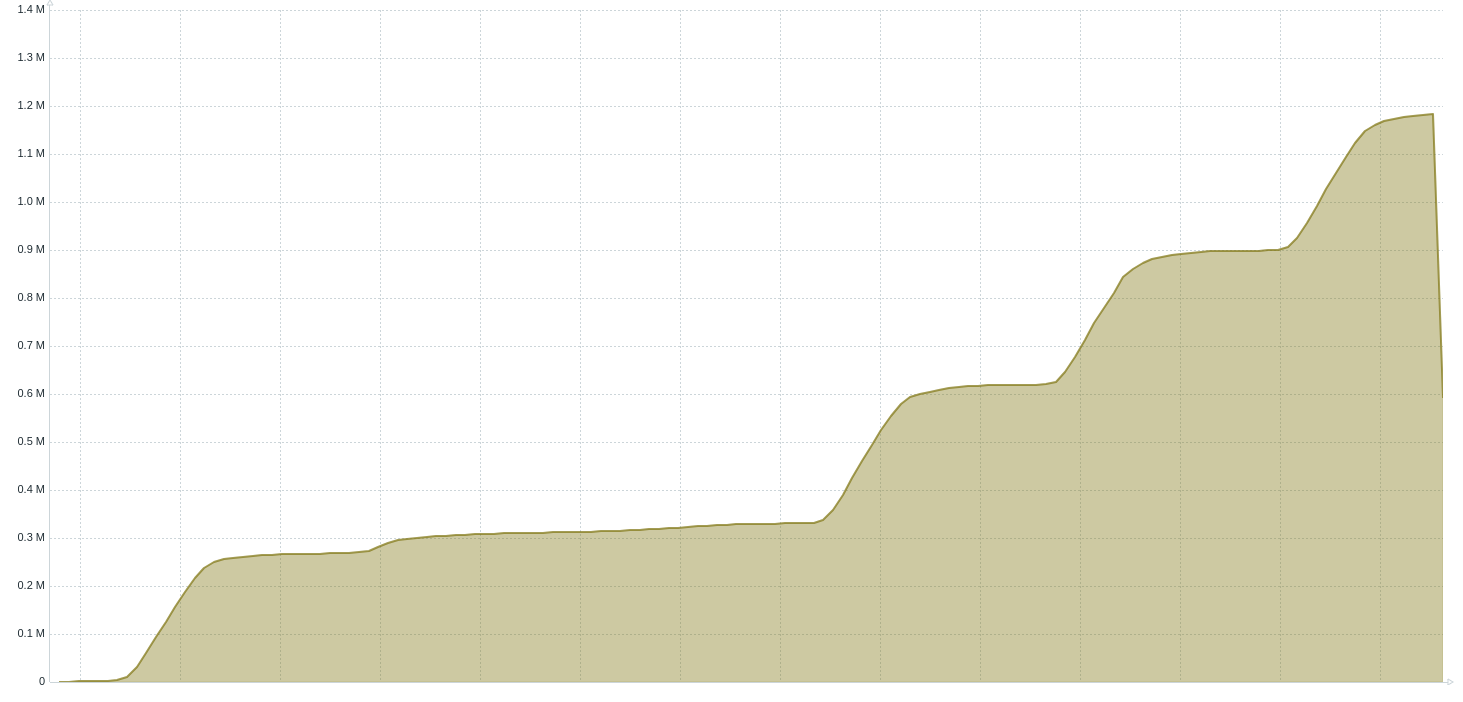

Allocation - live objects

Now on to memory leaks, which form the reason for tracking live object allocations:

A memory leak occurs when a Garbage Collector cannot collect Objects that are no longer needed by the Java application.

Memory leaks are one of the most common problems related to Java heaps; the other is

- not enough space on a heap - sometimes, a Java application may work fine with the heap it has, but there is the possibility to run a part of the application that needs more heap than specified via -Xmx

- a gray area between - these are cases when we allocate memory indefinitely, but our application needs these objects

How can we detect memory leaks? The GC emits the following kind of entry at the end of each GC cycle into the GC logs at info level:

GC(11536) Pause Young (Normal) (G1 Evacuation Pause) 6746M->2016M(8192M) 40.514ms

You can find three sizes in such an entry A->B(C) that are:

- A - used size of a heap before GC cycle

- B - used size of a heap after GC cycle

- C - the current size of a whole heap

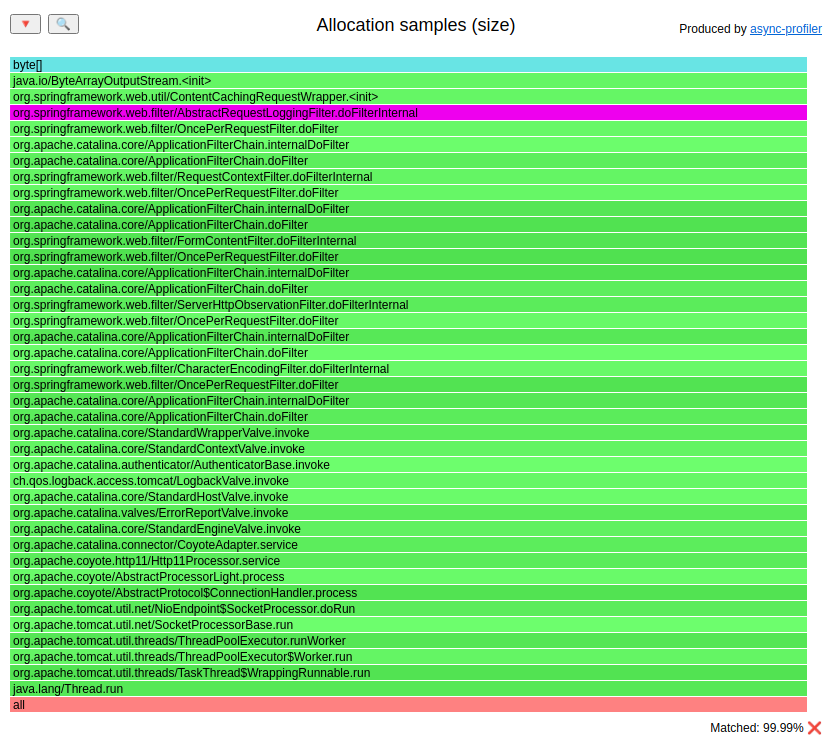

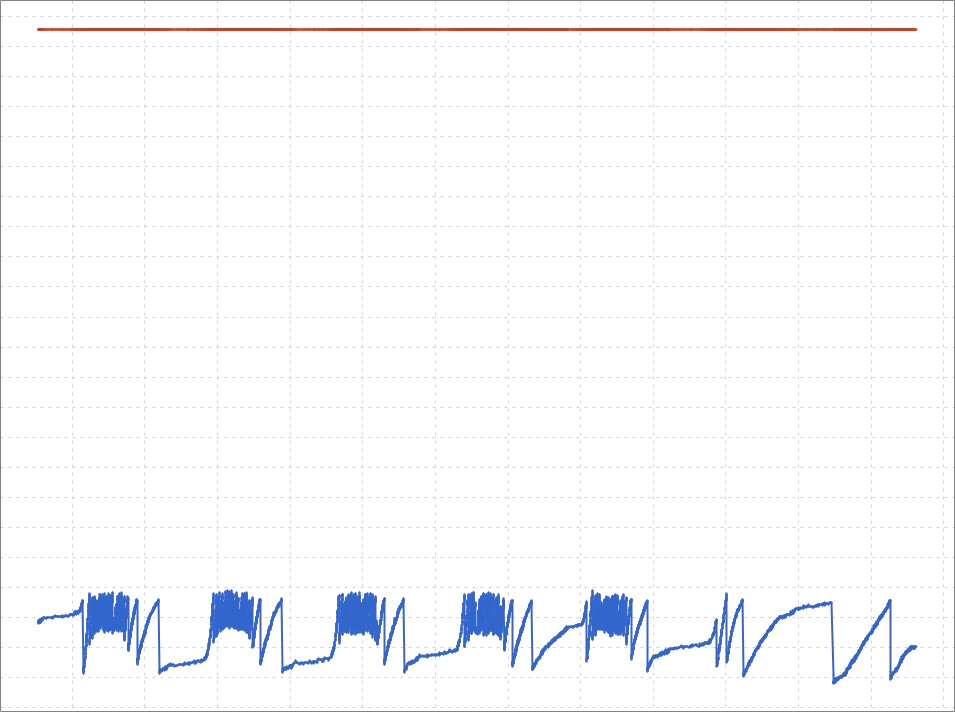

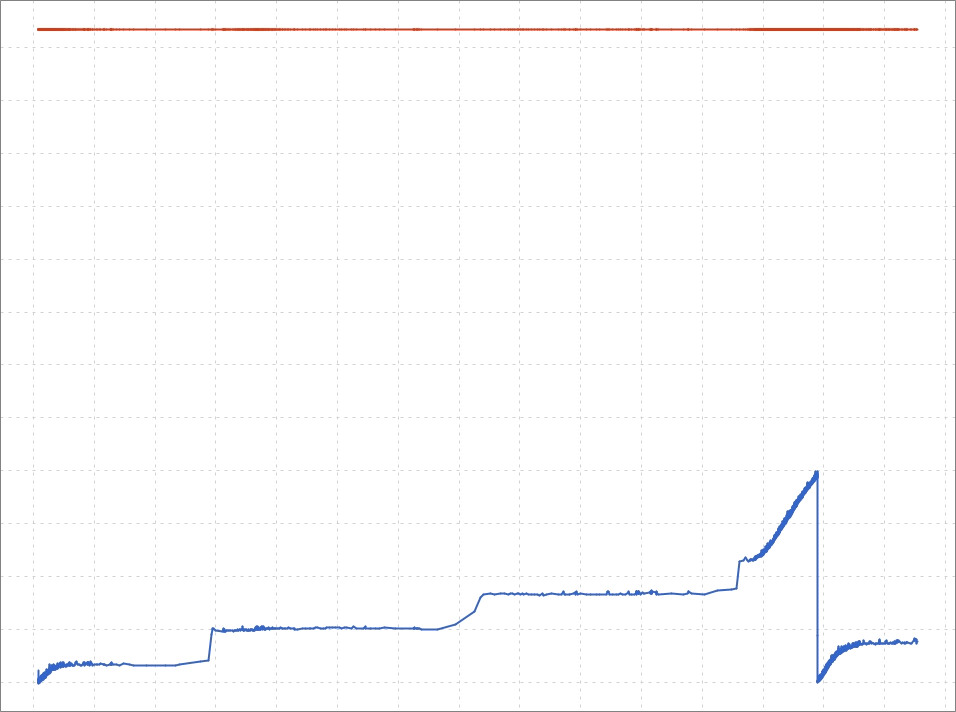

If we take the B value from each collection and put it on a chart, we can generate the Heap after GC chart. We can use such a chart to then detect if we have a memory leak: If a chart looks like those (from a 7 days period):

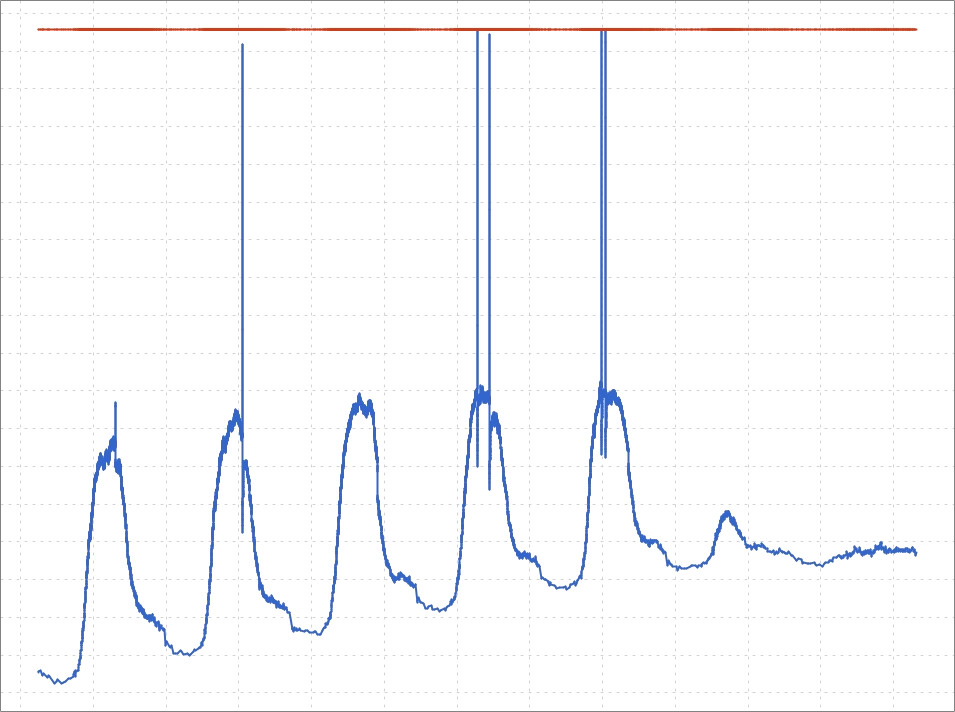

then there is no memory leak. The garbage collector can clean up the heap to the same level every day. The chart with a memory leak looks like the following one:

These spikes to the roof are to-space exhausted situations in the G1 algorithm; those are not OutOfMemoryErrors. After each of those spikes, there was a Full GC phase that is a failover in that algorithm.

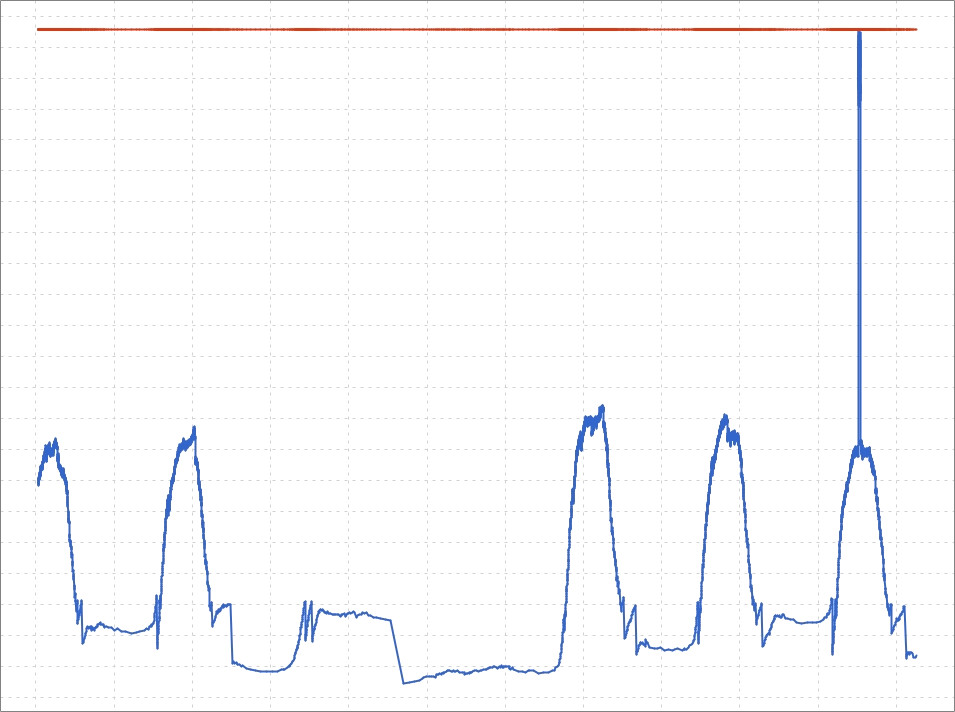

Here is an example of the not enough space on a heap problem:

This one spike is an OutOfMemoryError. One service was run with arguments that needed ~16GB on a heap to complete. Unfortunately -Xmx was set to 4GB. It is not a memory leak.

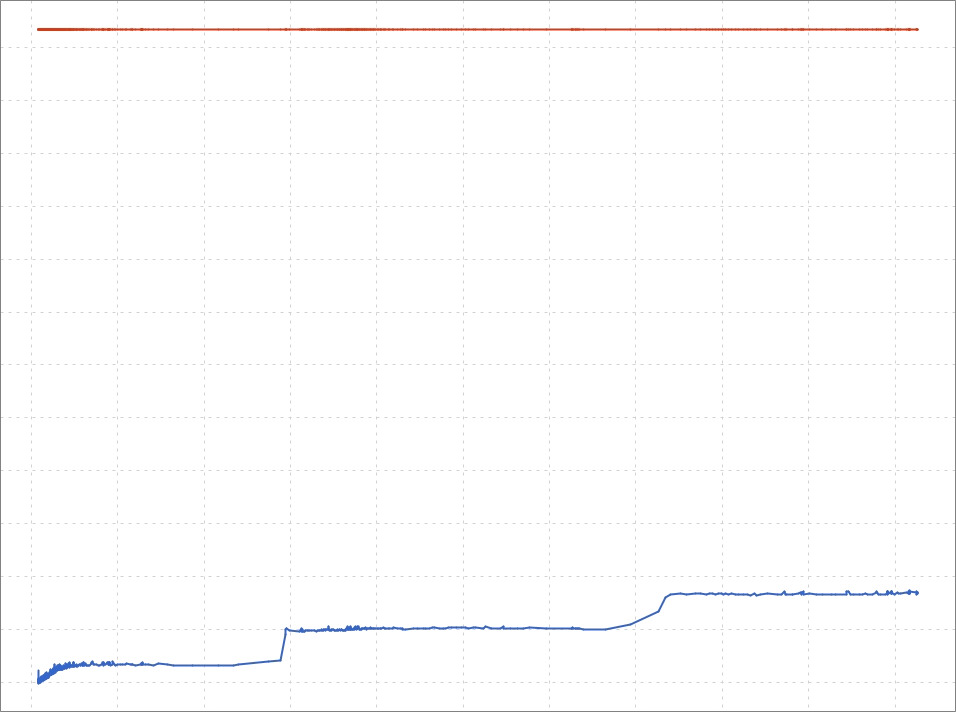

We must be careful if our application is entirely stateless and we use GC with young/old generations (like G1, parallel, serial, and CMS). We must remember that objects from a memory leak live in the old generation. In stateless applications, that part of the heap can be cleared even once a week. Here is an example recording 3 days of the stateless application:

It looks like a memory leak, the min(heap after GC) increasing every day, but if we look at the same chart with one additional day:

The GC cleared the heap to the previous level. This was done by an old-generation cleanup that didn’t happen in the previous days.

The Heap after GC chart can be generated by probing through JMX. The JVM gives that information via mBeans:

java.lang:type=GarbageCollector,name=G1 Young Generationjava.lang:type=GarbageCollector,name=G1 Old Generation

Both mBeans provide attributes with the name LastGcInfo from which we can extract the needed information.

Most memory leaks I discovered in recent years in enterprise applications were either in frameworks/libraries or in some kind of bridge between them. Recreating such an issue in our example application would require introducing a lot of strange dependencies, so I chose to recreate one custom-made heap memory leak I discovered a few years ago.

# preparation

curl http://localhost:8081/examples/leak/prepare

ab -n 1000 -c 4 http://localhost:8081/examples/leak/do-leak

# profiling

jcmd first-application-0.0.1-SNAPSHOT.jar GC.run

jcmd first-application-0.0.1-SNAPSHOT.jar GC.heap_info

./profiler.sh start -e alloc --live -f live.jfr first-application-0.0.1-SNAPSHOT.jar

ab -n 1000000 -c 4 http://localhost:8081/examples/leak/do-leak

jcmd first-application-0.0.1-SNAPSHOT.jar GC.run

jcmd first-application-0.0.1-SNAPSHOT.jar GC.heap_info

./profiler.sh stop -f live.jfr first-application-0.0.1-SNAPSHOT.jar

Let’s look at the output of the GC.heap_info commands that were invoked soon after running the GC:

garbage-first heap total 1048576K, used 50144K [0x00000000c0000000, 0x0000000100000000)

region size 1024K, 1 young (1024K), 0 survivors (0K)

Metaspace used 68750K, committed 69376K, reserved 1114112K

class space used 10054K, committed 10368K, reserved 1048576K

garbage-first heap total 1048576K, used 237806K [0x00000000c0000000, 0x0000000100000000)

region size 1024K, 1 young (1024K), 0 survivors (0K)

Metaspace used 68841K, committed 69440K, reserved 1114112K

class space used 10063K, committed 10368K, reserved 1048576K

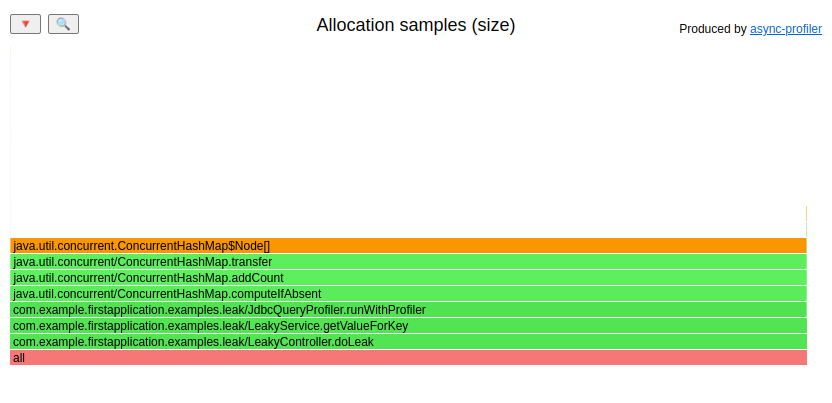

So invoking our do-leak request created ~183MB of objects that GC couldn’t free.

Let’s look at the allocation flame graph with the --live option enabled: (HTML)

The largest part of the leak is created in the JdbcQueryProfiler class; let’s look at the sources:

class JdbcQueryProfiler {

private final Map<String, ProfilingData> profilingResults = new ConcurrentHashMap<>();

<T> T runWithProfiler(String queryStr, Supplier<T> query) {

StopWatch stopWatch = new StopWatch();

stopWatch.start();

T ret = query.get();

stopWatch.stop();

profilingResults.computeIfAbsent(queryStr, ProfilingData::new).nextInvocation(stopWatch.getTotalTimeMillis());

return ret;

}

// ...

}

So that class calculates the execution time for each query and remembers it in

some ProfilingData structure that is placed in ConcurrentHashMap. That doesn’t look scary; as long as we use parametrized queries under our control, the map should have a finite size.

Let’s look at the usage of the runWithProfiler() method:

String getValueForKey(int key) {

String sql = "select a_value from LEAKY_ENTITY where a_Key = " + key;

return jdbcQueryProfiler.runWithProfiler(sql, () -> {

try {

return jdbcTemplate.queryForObject(sql, String.class);

} catch (EmptyResultDataAccessException e) {

return null;

}

});

}

So, well, so good; we are not using parameterized queries; we create new query strings for every key. This way

mentioned ConcurrentHashMap is growing with every new key passed to the getValueForKey method.

If you have a heap memory leak in your application, then you have two groups of objects:

- Live set - a group of objects that are still needed by your application

- Memory leak - a group of objects that are no longer needed

Garbage collectors cannot free the second group if there is at least one strong reference from the live set to the memory leak. The biggest problems with diagnosing memory leaks are:

- the fact that the object was created is not an issue - it was created because it was needed for something

- the fact that the mentioned reference was created is not an issue - it had some purpose too

- we need to understand why that reference was not removed from our application

The last one is not trivial. All the observability/profiling tools give us a great possibility to understand why some event has happened, but with memory leaks, we need to understand why something has not yet happened. Two additional tools come to our rescue:

- heap dump - shows us the current state of a heap - we can find out what kind of objects are there but shouldn’t be

- profiler - shows us where these objects were created

In this simple example, any of those tools is enough. In more complicated ones, I needed both to find the root cause of the problem. It is nice to finally have a tool that can profile memory leaks on production systems.

It is worth mentioning that the --live option is available only since async-profiler 2.9, it needs

JDK >= 11 and might still contain bugs. I didn’t have a chance to test it on any production system yet.

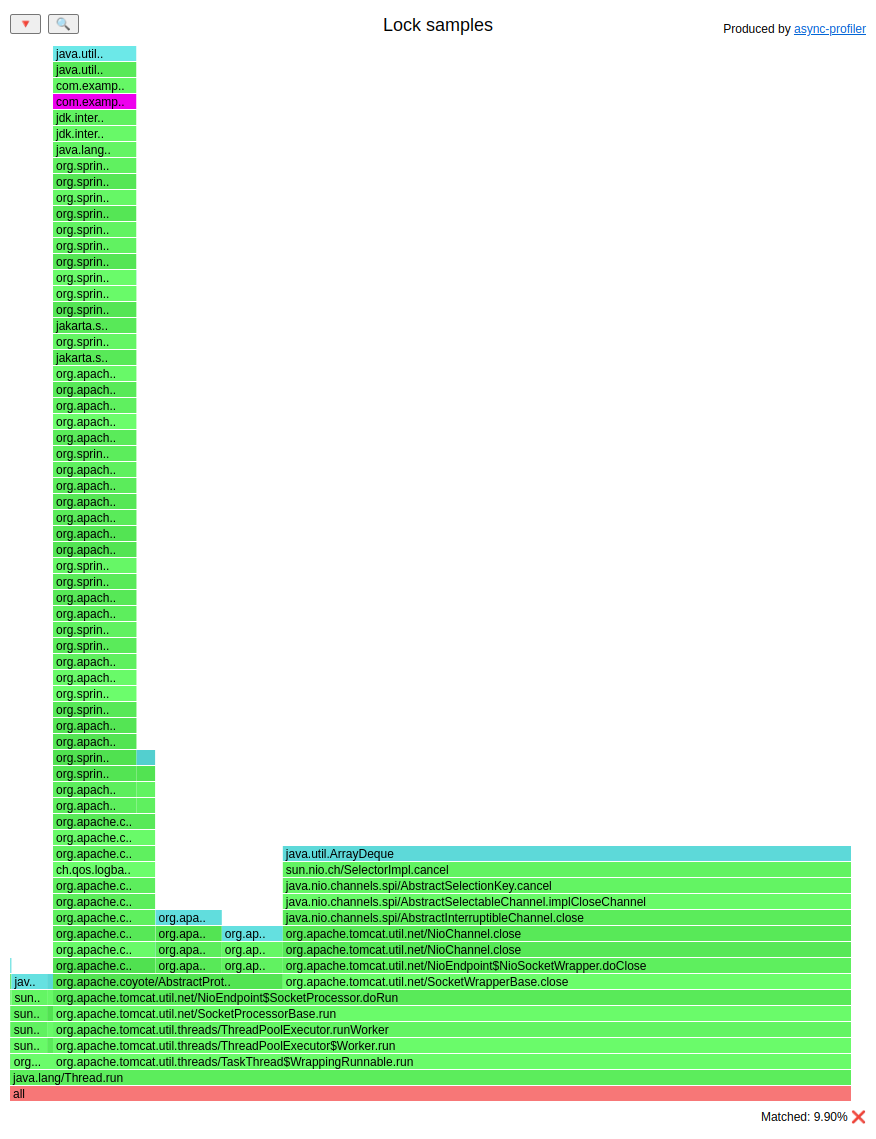

Locks

Async-profiler has a lock mode. This mode is useful when looking into look contention in our application.

Let’s try to use it and understand the internals of ConcurrentHashMap. The

get() method is obviously lock-free, but what about computeIfAbsent()?

Let’s profile a code that uses it:

class LockService {

private final Map<String, String> map = new ConcurrentHashMap<>();

LockService() {

String a = "AaAa";

String b = "BBBB";

log.info("Hashcode equals: {}", a.hashCode() == b.hashCode()); // true

map.computeIfAbsent(a, s -> a);

map.computeIfAbsent(b, s -> b);

}

void withLock(String key) {

map.computeIfAbsent(key, s -> key);

}

void withoutLock(String key) {

if (map.get(key) == null) {

map.computeIfAbsent(key, s -> key);

}

}

}

Let’s use lock mode to profile that:

# preparation

ab -n 100 -c 1 http://localhost:8081/examples/lock/with-lock

ab -n 100 -c 1 http://localhost:8081/examples/lock/without-lock

# profiling

./profiler.sh start -e lock -f lock.jfr first-application-0.0.1-SNAPSHOT.jar

ab -n 100000 -c 100 http://localhost:8081/examples/lock/with-lock

ab -n 100000 -c 100 http://localhost:8081/examples/lock/without-lock

./profiler.sh stop -f lock.jfr first-application-0.0.1-SNAPSHOT.jar

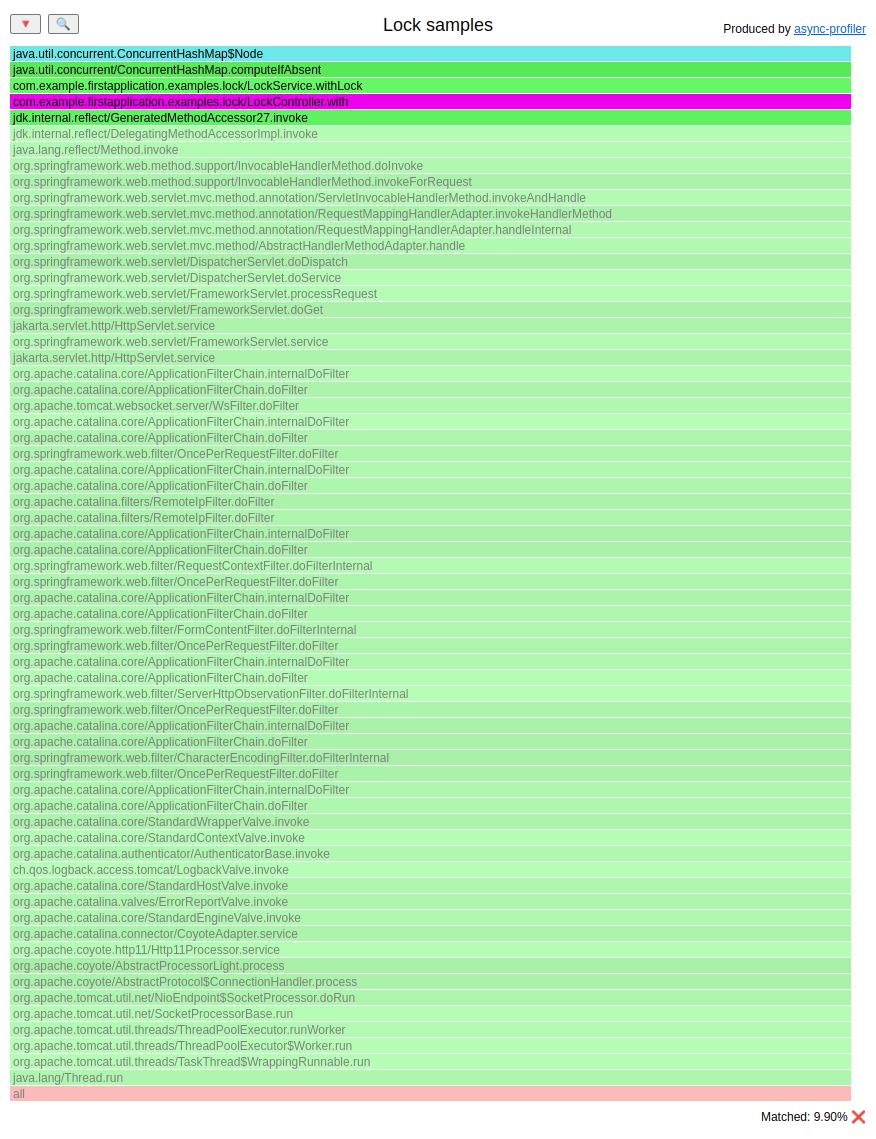

The lock flame graph: (HTML)

I highlighted the LockController occurrence. Let’s zoom it:

We see that only the withLock() method acquires locks. You can study the internals of the computeIfAbsent() method

to see that it might lock on hash collisions.

The easiest way to confirm this is by creating a small program that triggers hash collisions on purpose.

public static void main(String[] args) {

Map<String, String> map = new ConcurrentHashMap<>();

String a = "AaAa";

String b = "BBBB";

map.computeIfAbsent(a, s -> a);

// it enters the synchronized section here

map.computeIfAbsent(b, s -> b);

// it enters the synchronized section here, and all the following

// execution of computeIfAbsent with "BBBB" as a key.

map.computeIfAbsent(b, s -> b);

map.computeIfAbsent(b, s -> b);

map.computeIfAbsent(b, s -> b);

map.computeIfAbsent(b, s -> b);

}

If you observe a considerable lock contention in this method, and most of the

time a key is already in the map; then you may consider the approach used in the withoutLock() method.

Time to safepoint

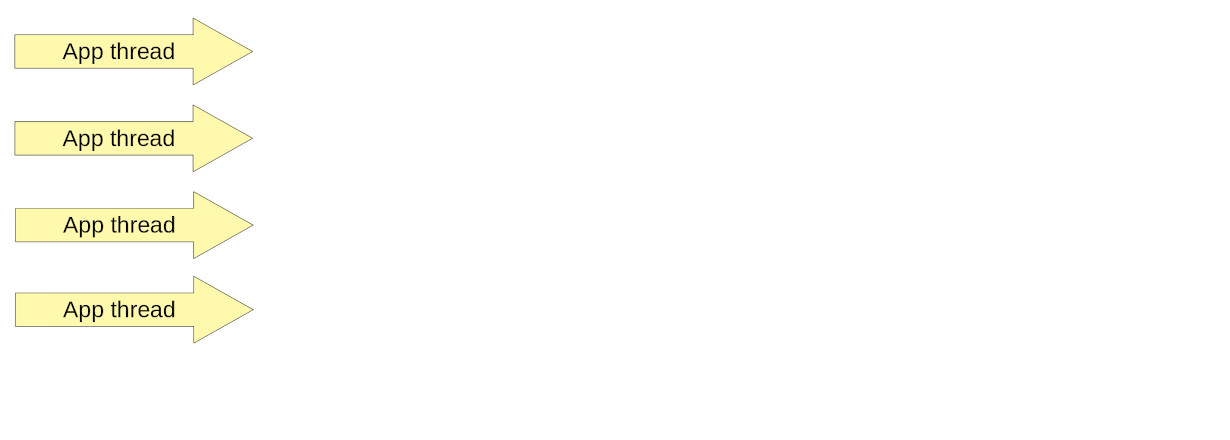

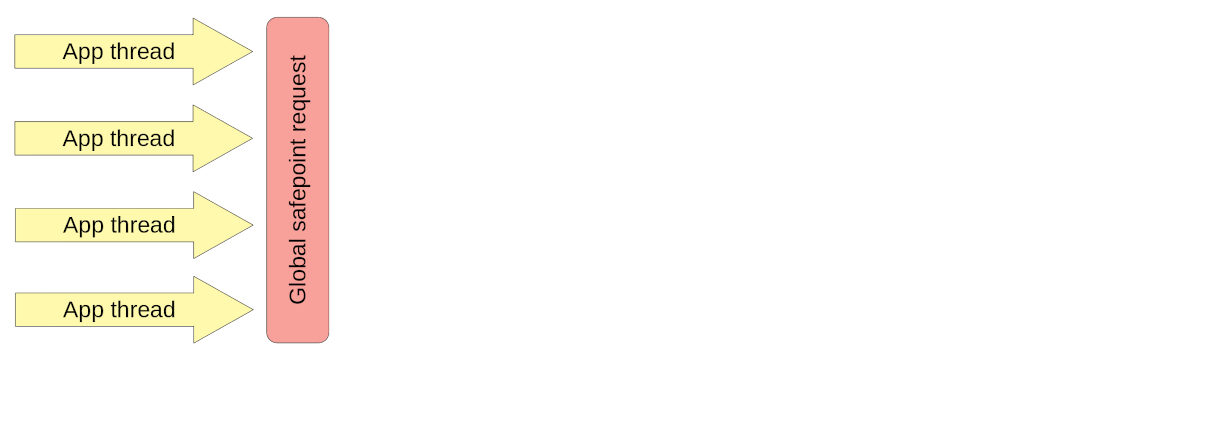

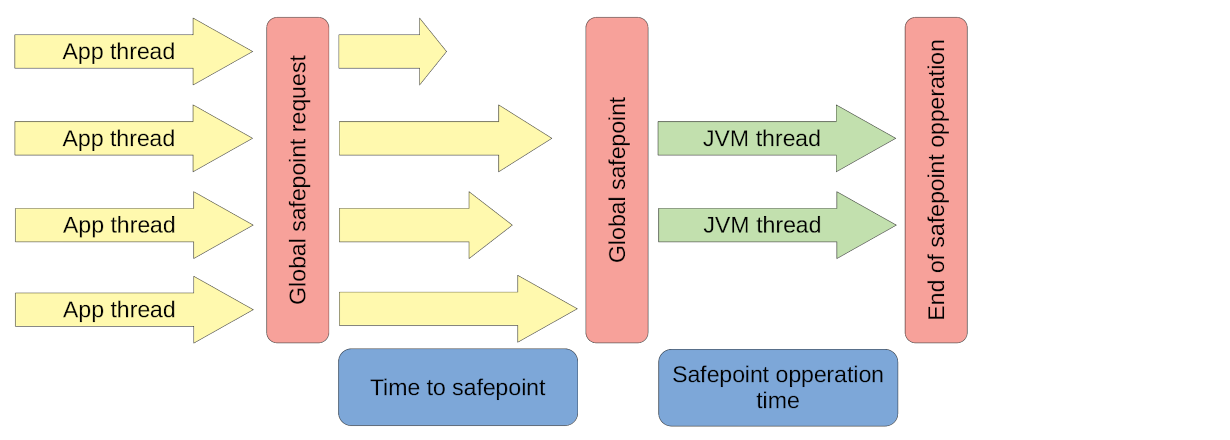

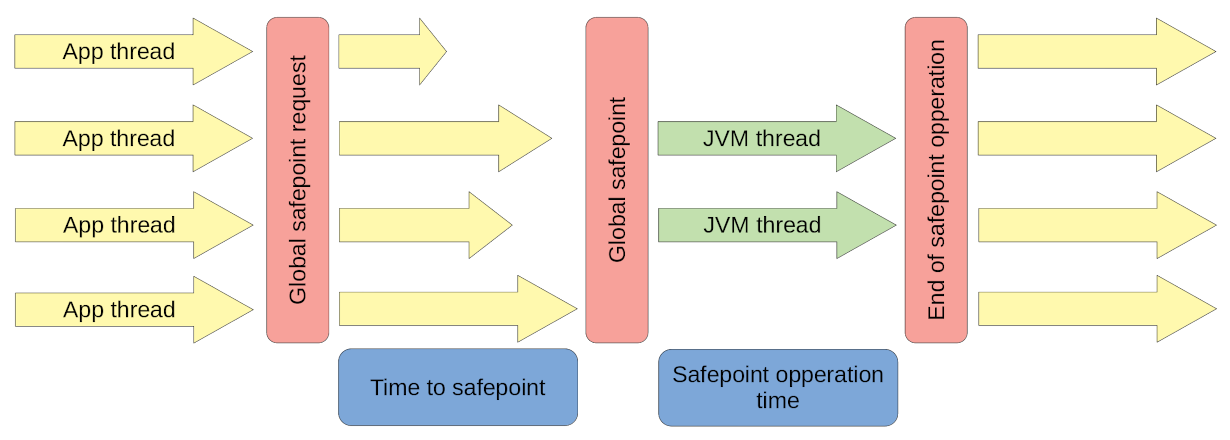

The common misconception in the Java world is that garbage collectors need a Stop-the-world (STW) phase to clean dead objects: But not only GC needs it. Other internal mechanisms require application threads to be paused. For example, the JVM needs an STW phase to deoptimize some compilations and to revoke biased locks. Let’s get a closer look at how the STW phase works.

On our JVM, there are running some application threads:

While running those threads from time to time, JVM needs to do some work in the STW phase. So it starts this phase, with a global safepoint request, which informs every thread to go to “sleep”:

Every thread has to find out about this. Stopping at a safepoint is cooperative: Each thread checks at certain points in the code if it needs to suspend. The time in which threads will be aware of an STW phase is different for every thread. Every thread has to wait for the slowest one. The time between starting an STW phase, and the slowest thread suspension, is called time to safepoint:

JVM threads can do the work that needs the STW phase only after every thread is asleep. The time when all application threads sleep, is called safepoint operation time:

When the JVM finishes its work, application threads are wakened up:

If the application suffers from long STW phases, then most of the time, those are GC cycles, and that information can be found in the GC logs or JFR. But the situation is more tricky if the application has one thread that slows down every other from reaching the safepoint.

# preparation

curl http://localhost:8081/examples/tts/start

ab -n 100 -c 1 http://localhost:8081/examples/tts/execute

# profiling

./profiler.sh start --ttsp -f tts.jfr first-application-0.0.1-SNAPSHOT.jar

ab -n 100 -c 1 http://localhost:8081/examples/tts/execute

./profiler.sh stop -f tts.jfr first-application-0.0.1-SNAPSHOT.jar

In safepoint logs (you need to run your JVM with the -Xlog:safepoint flag), we can see:

[105,372s][info ][safepoint ] Safepoint "ThreadDump", Time since last: 156842 ns, Reaching safepoint: 13381 ns, At safepoint: 120662 ns, Total: 134043 ns

[105,372s][info ][safepoint ] Safepoint "ThreadDump", Time since last: 157113 ns, Reaching safepoint: 14738 ns, At safepoint: 120252 ns, Total: 134990 ns

[105,373s][info ][safepoint ] Safepoint "ThreadDump", Time since last: 157676 ns, Reaching safepoint: 13700 ns, At safepoint: 120487 ns, Total: 134187 ns

[105,402s][info ][safepoint ] Safepoint "ThreadDump", Time since last: 159020 ns, Reaching safepoint: 29524545 ns, At safepoint: 160702 ns, Total: 29685247 ns

Reaching safepoint contains the time to safepoint. Most of the time, it is <15 ms, but we also see one outlier:

29 ms. Async-profiler in --ttsp mode collects samples between:

SafepointSynchronize::begin, andRuntimeService::record_safepoint_synchronized

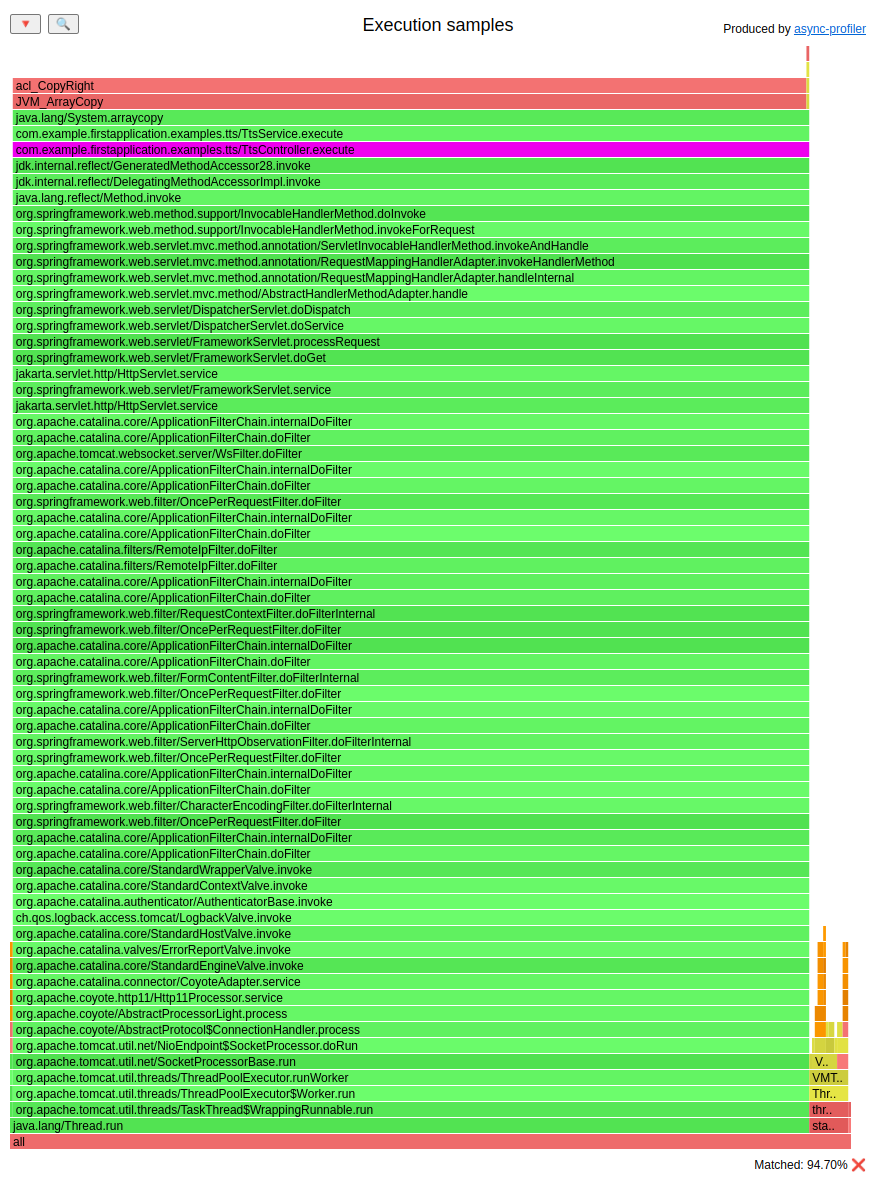

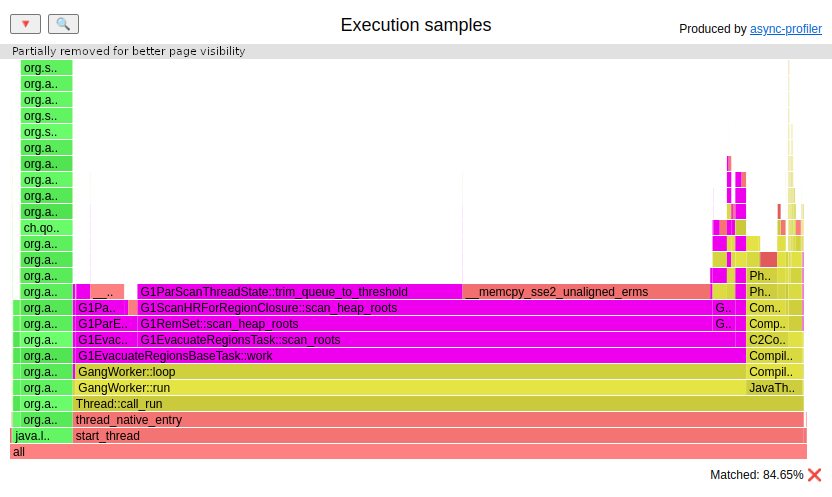

During that time, our application threads are trying to reach a safepoint: (HTML)

We can see that most of the gathered samples are executing arraycopy, invoked from TtsController.

The time to safepoint issues that I have approached so far are:

- arraycopy - as in our example

- old JDK + loops - since JDK 11u4 we have an loop strip mining optimization working correctly (after fixing JDK-8220374), before that if you had a counted loop, it could be compiled without any check for safepoint

- swap - when your application thread executes some work in thread_in_vm state (after calling some native method),

and during that execution, it waits for some pages to be swapped in/out, which can slow down reaching the safepoint

The solution for the arraycopy issue is to copy larger arrays by some custom method, which might use arraycopy for smaller sub-arrays. It will be a bit slower, but it will not slow down the whole application when reaching a safepoint is required.

For the swap issue, just disable the swap.

Methods

Async-profiler can instrument a method so that we can see all the stack traces with this method on the top. To achieve that, async-profiler uses instrumentation.

Big warning: It’s already pointed out in the README of the profiler that if you are not running

the profiler from agentpath, then the first instrumentation of a Java method can result in a code

cache flush. It’s not the fault of the async-profiler; it’s the nature of all instrumentation-based profilers

combined with JVM’s code. Here is a comment from the JVM sources:

// Deoptimize all compiled code that depends on the classes redefined. // // If the can_redefine_classes capability is obtained in the onload // phase then the compiler has recorded all dependencies from startup. // In that case we need only deoptimize and throw away all compiled code // that depends on the class. // // If can_redefine_classes is obtained sometime after the onload // phase then the dependency information may be incomplete. In that case // the first call to RedefineClasses causes all compiled code to be // thrown away. As can_redefine_classes has been obtained then // all future compilations will record dependencies so second and // subsequent calls to RedefineClasses need only throw away code // that depends on the class.

You can check the README PR discussion for more information on this topic. But let’s focus on the usage of the mode for our purposes. In this case, we could easily do it with a plain IDE debugger, but there are situations where something is happening only in one environment, or we are tracing some issues we do not know how to reproduce.

Since Spring beans are usually created during applications startup, let’s run our application that way:

java \

-agentpath:/path/to/libasyncProfiler.so=start,event="org.springframework.web.filter.AbstractRequestLoggingFilter.<init>" \

-jar first-application/target/first-application-0.0.1-SNAPSHOT.jar

The AbstractRequestLoggingFilter.<init> is simply a constructor. We are trying to find out

where such an object is created. After our application is started, we can execute such a command

in the profiler directory:

./profiler.sh stop first-application-0.0.1-SNAPSHOT.jar

It will print us to one stack trace:

--- Execution profile ---

Total samples : 1

--- 1 calls (100.00%), 1 sample

[ 0] org.springframework.web.filter.AbstractRequestLoggingFilter.<init>

[ 1] org.springframework.web.filter.CommonsRequestLoggingFilter.<init>

[ 2] com.example.firstapplication.examples.alloc.AllocConfiguration$1.<init>

[ 3] com.example.firstapplication.examples.alloc.AllocConfiguration.requestLoggingFilter

[ 4] com.example.firstapplication.examples.alloc.AllocConfiguration$$SpringCGLIB$$0.CGLIB$requestLoggingFilter$0

[ 5] com.example.firstapplication.examples.alloc.AllocConfiguration$$SpringCGLIB$$2.invoke

...

[24] org.springframework.beans.factory.support.AbstractBeanFactory.getBean

...

[33] org.springframework.boot.web.embedded.tomcat.TomcatStarter.onStartup

...

[58] org.springframework.boot.web.embedded.tomcat.TomcatWebServer.<init>

...

[78] org.springframework.boot.loader.JarLauncher.main

calls percent samples top

---------- ------- ------- ---

1 100.00% 1 org.springframework.web.filter.AbstractRequestLoggingFilter.<init>

We have all the information that we need. The object is created in AllocConfiguration during

creation of CommonsRequestLoggingFilter bean.

One of the other use cases where I used to use method profiling was finding memory leaks. I knew which types were leaking from the heap dump, and with method profiling, I could see where objects of these types were created. Consider this a fallback when the dedicated mode does not work.

Native functions

Not only can you trace Java code with the async-profiler but also a native one. That way of profiling doesn’t cause deoptimizations.

Some native functions are worth a better look; let’s cover them quickly.

Exceptions

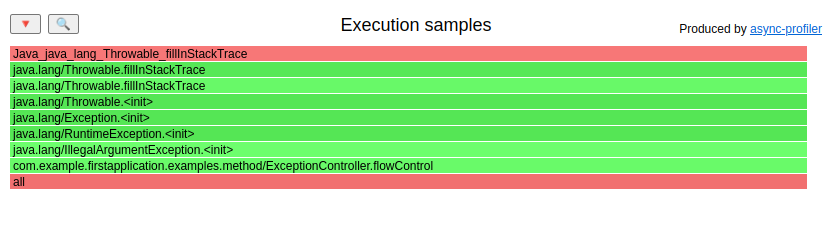

How many exceptions should be thrown if your application works without any outage/downtime and everything is stable? Exceptions should be thrown if something unexpected happens.

Unfortunately, I saw an application that used the exception-control-flow approach

more common in languages like Python. Creating a new

exception is a CPU-intensive operation since, by default, it fills the stack trace.

I once saw an application that consumed ~15% of its CPU time on just

creating new exceptions. You can use async-profiler in method mode with

event Java_java_lang_Throwable_fillInStackTrace if you want to see where exceptions are created.

Let’s start our application with profiler enabled from the start to see also how many exceptions are thrown during the startup of a Spring Boot application, just for fun:

java \

-agentpath:/path/to/libasyncProfiler.so=start,jfr,file=exceptions.jfr,event="Java_java_lang_Throwable_fillInStackTrace" \

-jar first-application/target/first-application-0.0.1-SNAPSHOT.jar

After the startup, let’s run:

ab -n 100 -c 1 http://localhost:8081/examples/exc/

./profiler.sh stop -f exceptions.jfr first-application-0.0.1-SNAPSHOT.jar

The flame graph is too large to post it here as an image, sorry. Spring Boot, in that case, threw 12478 exceptions. You can play with HTML. Let’s focus on our synthetic controller:

Source code of the controller:

@GetMapping("/")

String flowControl() {

ThreadLocalRandom random = ThreadLocalRandom.current();

try {

if (!random.nextBoolean()) {

throw new IllegalArgumentException("Random returned false");

}

} catch (IllegalArgumentException e) {

return "EXC";

}

return "OK";

}

If you care about performance, don’t use the exception-control-flow approach. If you really need such a code, reuse exception options like ANTLR or create an exception constructor that doesn’t fill the stack trace:

/**

* Constructs a new exception with the specified detail message,

* cause, suppression enabled or disabled, and writable stack

* trace enabled or disabled.

*

* @param message the detail message.

* @param cause the cause. (A {@code null} value is permitted,

* and indicates that the cause is nonexistent or unknown.)

* @param enableSuppression whether or not suppression is enabled

* or disabled

* @param writableStackTrace whether or not the stack trace should

* be writable

* @since 1.7

*/

protected Exception(String message, Throwable cause,

boolean enableSuppression,

boolean writableStackTrace) {

super(message, cause, enableSuppression, writableStackTrace);

}

Just set writableStackTrace to false. It will be rather ugly but faster.

G1GC humongous allocation

We already saw how to detect humongous objects with allocation mode. Since

async-profiler can also instrument JVM code, and allocations of a humongous objects are

nothing else than invocations of C++ code, we can take advantage of that.

If you want to check where humongous objects are allocated, you can use native functions mode

with event G1CollectedHeap::humongous_obj_allocate. This approach may have lower

overhead but won’t give you sizes of allocated objects.

# little warmup

ab -n 2 -c 1 http://localhost:8081/examples/alloc/

# profiling time

./profiler.sh start -e "G1CollectedHeap::humongous_obj_allocate" -f humongous.jfr first-application-0.0.1-SNAPSHOT.jar

ab -n 1000 -c 1 http://localhost:8081/examples/alloc/

./profiler.sh stop -f humongous.jfr first-application-0.0.1-SNAPSHOT.jar

The flame graph is almost the same as in alloc mode; we can see some JVM yellow frames this time too:

(HTML)

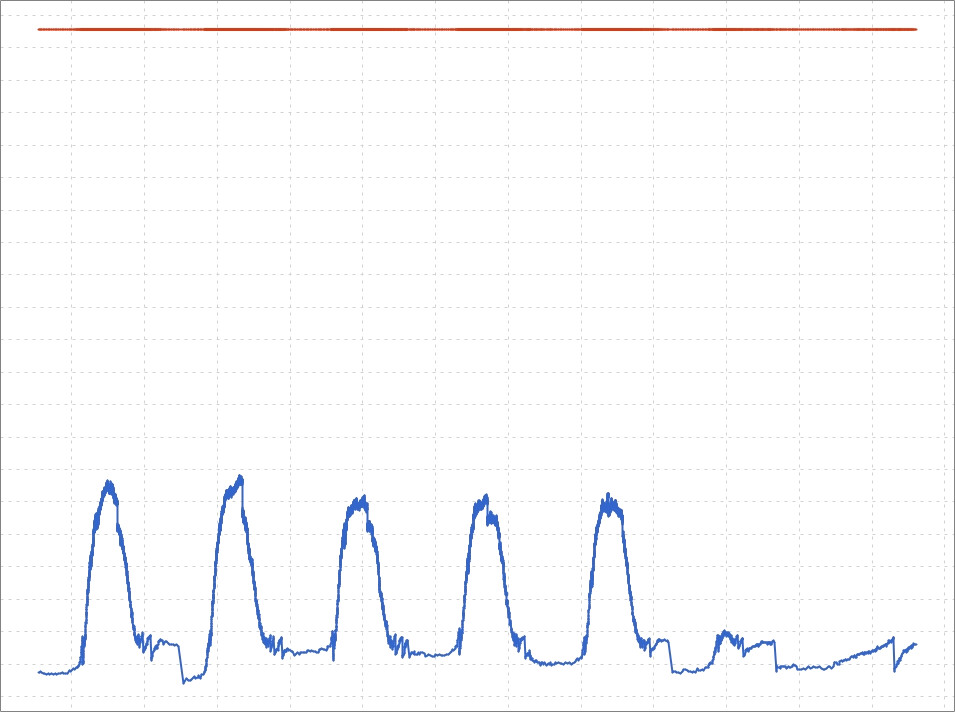

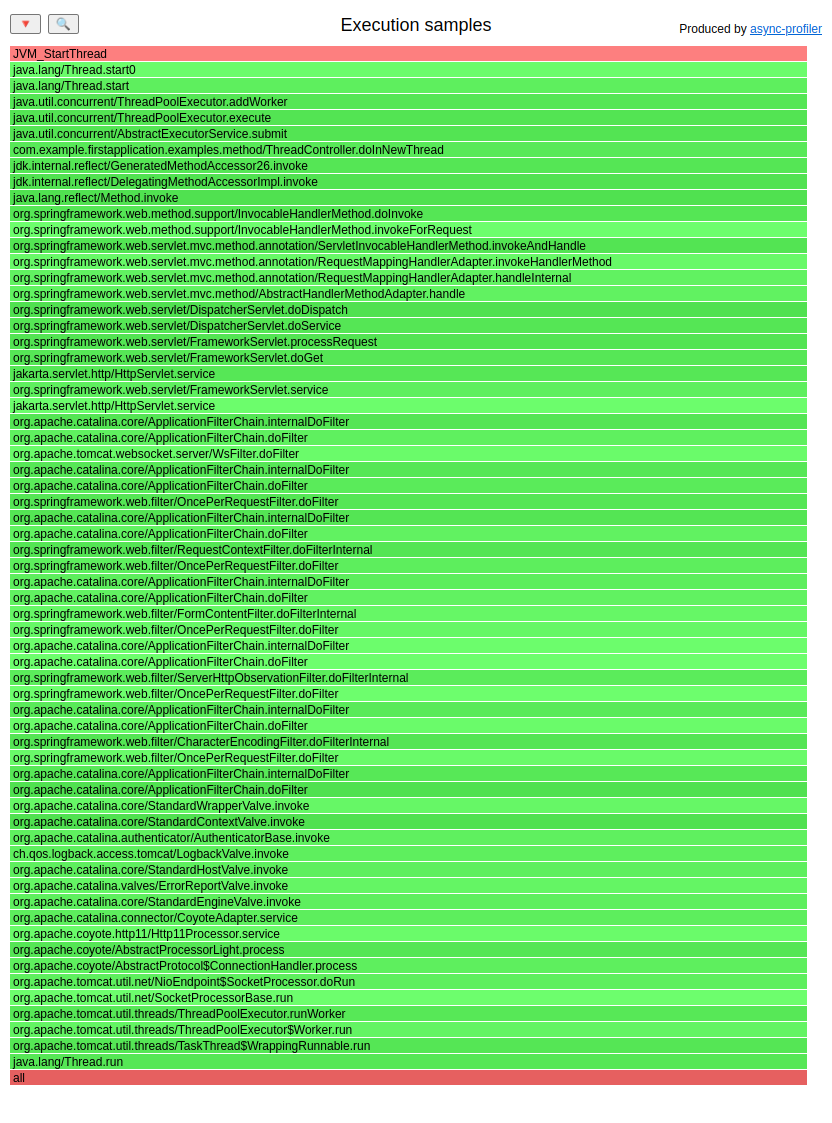

Thread start

Starting a platform thread is an expensive operation too. The number of started threads can be easily monitored with any JMX-based monitoring tool like JMC. Here is the MBean with the value of all the created threads:

java.lang:type=Threading

attribute=TotalStartedThreadCount

If you monitor that value and the chart like that:

Then you might want to check who is creating those short-living threads:

We use async-profiler with the JVM_StartThread event in native functions mode for this purpose:

# little warmup

ab -n 100 -c 1 http://localhost:8081/examples/thread/

# profiling time

./profiler.sh start -e "JVM_StartThread" -f threads.jfr first-application-0.0.1-SNAPSHOT.jar

ab -n 1000 -c 1 http://localhost:8081/examples/thread/

./profiler.sh stop -f threads.jfr first-application-0.0.1-SNAPSHOT.jar

The flame graph: (HTML)

This flame graph is not really complicated. But it is only a small example. In real life, such flame graphs are larger.

The code responsible for the thread creation observed in the flame graph is the following:

@SneakyThrows

@GetMapping("/")

String doInNewThread() {

ExecutorService threadPool = Executors.newFixedThreadPool(1);

return threadPool.submit(() -> {

return "OK";

}).get();

}

And yes, I saw such a pattern in a real production application. The intention was to have a fixed thread pool and delegate tasks to it, but by mistake, someone created that pool for every request.

Class loading

Similar to creating short-living threads, I saw an application that created plenty of short-living class definitions. I know there are some use cases for such behavior, But it has been an accident in this case. You can monitor the number of loaded classes with JMX:

java.lang:type=ClassLoading

attribute=TotalLoadedClassCount

There are some internals of the JVM, like reflection or debugging, which are used in a variety of frameworks, that can generate

new class definitions during runtime: So increasing that number (even after warmup)

doesn’t mean that we have a problem already. But if TotalLoadedClassCount is much higher

than LoadedClassCount, then we might have a problem. You can find the creator of those classes with method mode and

the event: Java_java_lang_ClassLoader_defineClass1.

To be honest, I saw such an issue only once and cannot reproduce it now. Making a synthetic example for this use-case seems wrong, so I will just keep you with the knowledge that there is such a possibility, especially if you purposefully create classes dynamically.

Perf events

Async-profiler can also help you with low-level diagnosis where you want to correlate perf events with Java code:

context-switches- to find out which parts of your Java code do context switchingcache-misses- which part of your code can stall due to cache misses - this information is harder to analyze if you have many context switchesLLC-load-misses- which part of your code needs a lot of data directly from RAM which is not cached- …

I want to describe the three in more detail in the following.

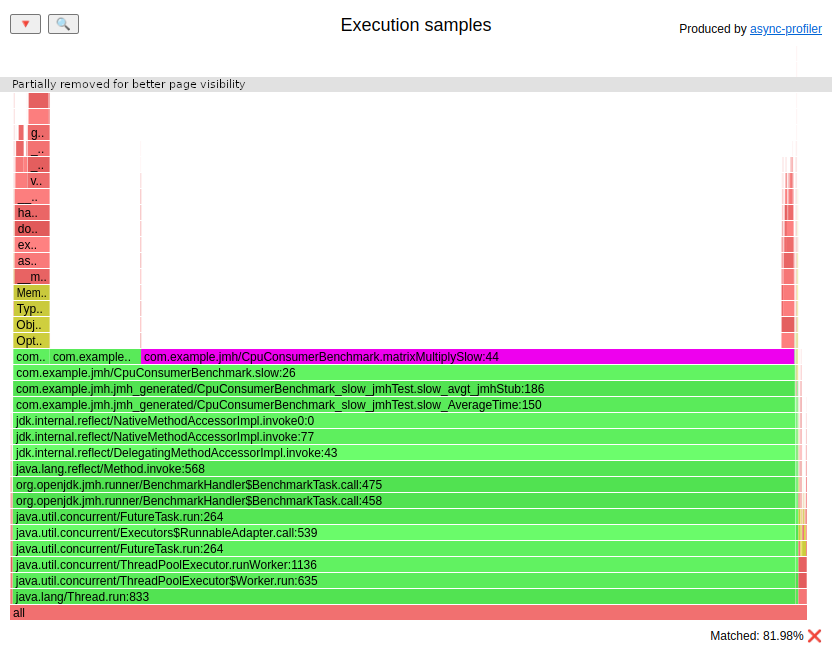

Cache misses

Let’s return to the example with matrix multiplication from the CPU - a bit harder section. I usually start by looking at basic CPU performance counters to see what our CPU is doing in the slow and the fast multiplication. This is the textbook example of cache misses and their importance for performance. I like to start with the JMH test to profile the specific code properly.

I’ve prepared such a benchmark in the jmh-suite module. Let’s run it with the perf profiler:

java -jar jmh-suite/target/benchmarks.jar -prof perf

The fast algorithm (I’ve cut the output to the most interesting metrics):

20 544,42 msec task-clock # 1,008 CPUs utilized

49 510 157 799 L1-dcache-loads # 2,410 G/sec (38,55%)

9 300 675 824 L1-dcache-load-misses # 18,79% of all L1-dcache accesses (38,55%)

1 635 877 333 LLC-loads # 79,626 M/sec (30,80%)

27 833 149 LLC-load-misses # 1,70% of all LL-cache accesses (30,76%)

The slow one:

22 291,74 msec task-clock # 1,008 CPUs utilized

71 632 332 204 L1-dcache-loads # 3,213 G/sec (38,51%)

29 718 804 848 L1-dcache-load-misses # 41,49% of all L1-dcache accesses (38,50%)

6 909 042 687 LLC-loads # 309,937 M/sec (30,79%)

10 043 405 LLC-load-misses # 0,15% of all LL-cache accesses (30,79%)

The slower algorithm has three times more L1 data cache misses and over four times more last-level cache loads. We can now use async-profiler in three different modes:

java -jar jmh-suite/target/benchmarks.jar -prof async:libPath=/path/to/libasyncProfiler.so\;event=cache-misses\;output=jfr

java -jar jmh-suite/target/benchmarks.jar -prof async:libPath==/path/to/libasyncProfiler.so\;event=L1-dcache-load-misses\;output=jfr

java -jar jmh-suite/target/benchmarks.jar -prof async:libPath==/path/to/libasyncProfiler.so\;event=LLC-load-misses\;output=jfr

All three flame graphs are very similar; let’s take a look at cache-misses: (HTML)

I added the line numbers this time, so we could see exactly where the problem was. ~82% of cache misses occurred in the same line:

sum += a[i][k] * b[k][j];

This line is nested inside three loops. The order of loops is i, j, k. If we unroll the last loop four times

we would get the following:

sum += a[i][k + 0] * b[k + 0][j];

sum += a[i][k + 1] * b[k + 1][j];

sum += a[i][k + 2] * b[k + 2][j];

sum += a[i][k + 3] * b[k + 3][j];

Let’s look at this code from a memory layout perspective. The array a[i] is a contiguous part of memory. That’s how Java

allocates arrays. Elements a[i][k + 0] … a[i][k + 3] are very close to each other and are loaded

sequentially. The CPU loads small blocks of memory from RAM into the cache if the block is not already there.

Accessing data in a sequential pattern is, therefore, far less expensive.

The access pattern to table b is completely different. The b[k + x] is just a pointer to a table. It is

somewhere on the heap, but where exactly? Well, we cannot control that. Element b[k + 0][j] may be in a completely

different place than b[k + 1][j]. That’s unfortunate for the CPU. This is why the speed difference between

both matrix multiplications is not as large as expected.

Memory access patterns are the key here. The matrixMultiplyFaster algorithm accesses the table a mostly sequentially, which is why it’s faster.

I don’t want to go into detail about what is happening in the CPU with these algorithms. This post aims to teach the usage of async-profiler, not CPU architecture and algorithm engineering. If you want to go deeper with that knowledge, a very good book for a start is Denis Bakhvalov - Performance Analysis and Tuning on Modern CPUs. It’s not about Java, but I cannot recommend any Java-centric book related to CPU architecture, as it’s still a relatively niche topic. I know that two very good performance engineers are writing one now. When it is published, I will paste a link here.

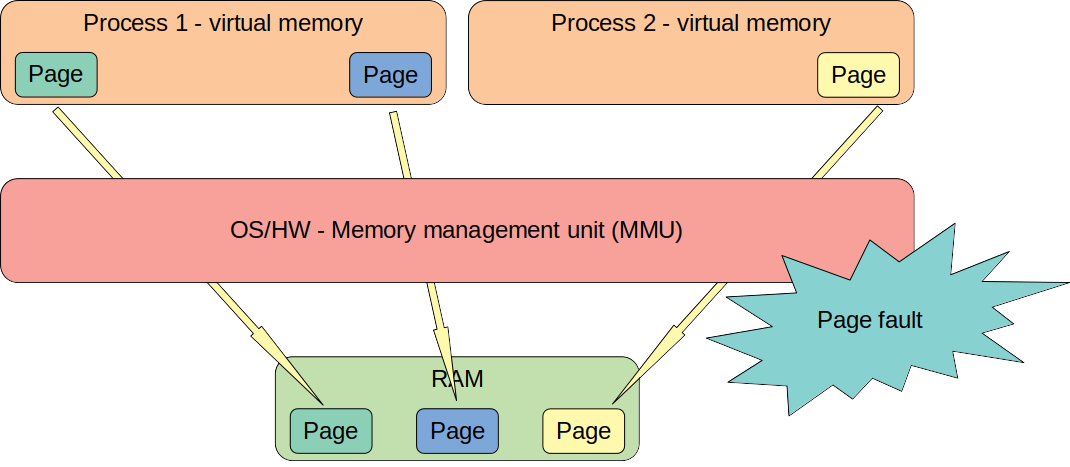

Page faults

Every process running on Linux contains its own virtual memory. If a process needs more

memory, it invokes functions like malloc or mmap. The OS guarantees the returned memory to be readable/writable by the current process.

But this does not mean that any block of physical RAM has been reserved for the process.

The OS is smart enough to decide whether that fault should be converted into a SEGFAULT or should trigger the kernel to map RAM to the process’s virtual memory because it was previously promised to the process.

Java is a process from an OS perspective, nothing less, nothing more. Knowing that we can trace

page fault events to detect why our application consumes more RAM. It may be a native memory

leak or some framework/library/JVM bug.

But this is not perfect for tracing leaks since it shows every request for additional RAM,

including ones that may be freed in the future. I know that Andrei Pangin is working

on a native memory leak detector that will trace allocations that haven’t been freed, but for

now, that feature is not in the latest release.

As an example, let’s run our application with and without -XX:+AlwaysPreTouch,

forcing the JVM to access all allocated memory after requesting it from the OS.

This allows us to find where Java needs more RAM after startup. We will use the heap memory leak that we used

before:

java -Xmx1G -Xms1G -XX:+AlwaysPreTouch \

-jar first-application/target/first-application-0.0.1-SNAPSHOT.jar

In the other console, let’s do the following:

ab -n 10000 -c 4 http://localhost:8081/examples/leak/do-leak

./profiler.sh start -e page-faults -f page-faults-apt-on.jfr first-application-0.0.1-SNAPSHOT.jar

ab -n 1000000 -c 4 http://localhost:8081/examples/leak/do-leak

./profiler.sh stop -f page-faults-apt-on.jfr first-application-0.0.1-SNAPSHOT.jar

Now let’s do the same without -XX:+AlwaysPreTouch:

java -Xmx1G -Xms1G \

-jar first-application/target/first-application-0.0.1-SNAPSHOT.jar

In the other console, let’s execute the following:

ab -n 10000 -c 4 http://localhost:8081/examples/leak/do-leak

./profiler.sh start -e page-faults -f page-faults-apt-off.jfr first-application-0.0.1-SNAPSHOT.jar

ab -n 1000000 -c 4 http://localhost:8081/examples/leak/do-leak

./profiler.sh stop -f page-faults-apt-off.jfr first-application-0.0.1-SNAPSHOT.jar

Flame graph without -XX:+AlwaysPreTouch: (HTML)

Most of the need for additional RAM is acquired in GC threads, but there are some page faults in our Java code (big green flame). These page faults can hurt your performance and make your latency less predictable.

Flame graph with -XX:+AlwaysPreTouch: (HTML)

Almost all the frames needing additional RAM now belong to compiler threads. This is due the code heap growing with the compilation of new methods.

I was able to isolate and recreate the memory leak that I described in JDK-8240723 with that mode.

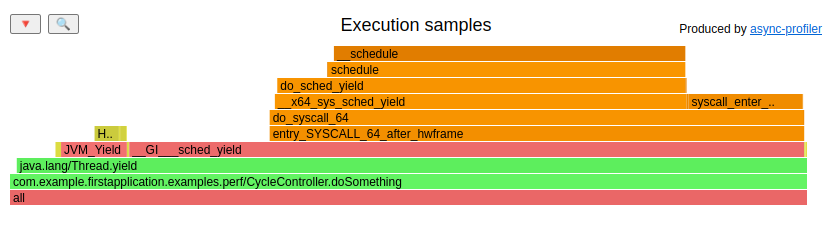

Cycles

If you need better visibility of what your kernel is doing, then you may consider choosing the

cycles event instead of cpu. This may be useful for low-latency applications

or while chasing bugs in the kernel (those also exist). Let’s see the difference:

# warmup

curl -v http://localhost:8081/examples/cycles/

# profiling

./profiler.sh start -e cpu -f cycles-cpu.jfr first-application-0.0.1-SNAPSHOT.jar

curl -v http://localhost:8081/examples/cycles/

./profiler.sh stop -f cycles-cpu.jfr first-application-0.0.1-SNAPSHOT.jar

./profiler.sh start -e cycles -f cycles-cycles.jfr first-application-0.0.1-SNAPSHOT.jar

curl -v http://localhost:8081/examples/cycles/

./profiler.sh stop -f cycles-cycles.jfr first-application-0.0.1-SNAPSHOT.jar

The flame graph for cpu profiling:

(HTML)

Corresponding profile for cycles event:

(HTML)

As we can see, the cycles profile is more detailed.

Native memory leaks

With the 4.0 Async-profiler release, we can use the new nativemem mode which:

… records

malloc,realloc,callocandfreecalls with the addresses, so that allocations can be matched with frees.

This mode is extremely helpful with native memory leak detection. I already wrote an article on this topic. Now let’s just focus on usage of Async-profiler for a problem that I had in the past.

Our application is run with 1GB fixed heap size:

java -Xmx1G -Xms1G -XX:+AlwaysPreTouch \

-jar first-application/target/first-application-0.0.1-SNAPSHOT.jar

This native memory “leak” I want to show you is correlated with AWS S3 uploads. To make it easier to recreate let’s run localstacks on our PC and create a proper bucket and a file to upload:

docker run --rm -it -p 4566:4566 -p 4571:4571 localstack/localstack

aws --endpoint-url=http://localhost:4566 s3 mb s3://temp-bucket

dd if=/dev/zero of=/tmp/to_upload.tmp bs=1M count=15

Let’s invoke our app:

ab -n 1 http://localhost:8081/examples/aws/upload

Now let’s check how much memory is used by the application from an OS perspective:

jcmd first-application-0.0.1-SNAPSHOT.jar GC.run

smem -c "pid command rss pss" -a -P "first-application-0.0.1-SNAPSHOT.jar"

The output:

PID Command RSS PSS

18206 /home/pasq/JDK/amazon-corretto-17.0.1.12.1-linux-x64/bin/ 1388836 1372957

Let’s invoke more of this endpoint with the profiler attached (the profiler.sh script is gone, we now use asprof executable):

./asprof start -e nativemem -f nativemem.jfr first-application-0.0.1-SNAPSHOT.jar

ab -n 200 -c 10 http://localhost:8081/examples/aws/upload

jcmd first-application-0.0.1-SNAPSHOT.jar GC.run

./asprof stop -f nativemem.jfr first-application-0.0.1-SNAPSHOT.jar

Let’s check how much memory is used now:

smem -c "pid command rss pss" -a -P "first-application-0.0.1-SNAPSHOT.jar"

The output:

PID Command RSS PSS

18206 /home/pasq/JDK/amazon-corretto-17.0.1.12.1-linux-x64/bin/ 1632140 1616206

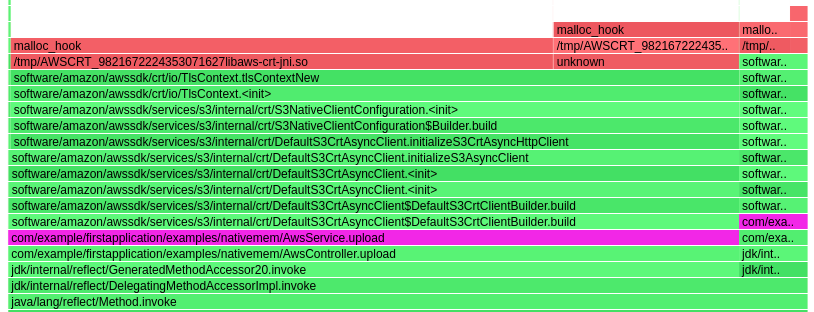

We can clearly see that both RSS and PSS grew. Let’s see what data were gathered by the profiler. Let’s convert JFR to a flame graph first:

./jfrconv --total --nativemem --leak nativemem.jfr nativemem.html

(HTML)

We can see that nativemem mode blames the AwsService.upload method for the “leak”. In the HTML version you can see also other AWS-related allocations without any Java stack traces,

but let’s focus on our code:

void upload() {

S3CrtAsyncClientBuilder s3CrtAsyncClientBuilder = S3AsyncClient.crtBuilder()

.endpointOverride(new URI("http://127.0.0.1:4566"))

.credentialsProvider(StaticCredentialsProvider.create(AwsBasicCredentials.create(ACCESS_KEY_ID, SECRET_ACCESS_KEY)))

.region(REGION);

try (S3AsyncClient s3Client = s3CrtAsyncClientBuilder.build()) {

s3Client

.putObject(

req -> req.bucket(BUCKET).key(NAME),

AsyncRequestBody.fromFile(Paths.get(FILE_TO_UPLOAD)))

.join();

}

}

We can also see (on the flame graph) that the native memory allocation was done in the DefaultS3CrtClientBuilder.build method. That line is covered with try-with-resources,

so the close method on the returned object should be invoked automatically. The method is invoked, but it doesn’t clean all the native allocations done by the builder.

This issue I found with AWS S3 libraries with the following versions:

<dependency>

<groupId>software.amazon.awssdk</groupId>

<artifactId>s3</artifactId>

<version>2.25.23</version>

</dependency>

<dependency>

<groupId>software.amazon.awssdk.crt</groupId>

<artifactId>aws-crt</artifactId>

<version>0.29.14</version>

</dependency>

The behavior may vary with different versions. You can check how it looks with the newest ones.

Filtering single request

Why aggregated results are not enough

So far, we have been looking at the profile of a whole application. But what if the app works well but there are some slower requests from time to time, and we want to know why? In such an application, where one request is handled by one thread, we can extract the profile of a single request. The JFR file contains all the information needed; we just need to filter them out. To do it, we need to have a log that will tell us which thread was responsible for the execution of the request at the time of the execution. Tomcat, embedded into Spring Boot, has access logs with all that information.

I configured our example application with access logs in the format:

[%t] [%r] [%s] [%D ms] [%I]

Here is a short explanation of that magic:

%t- time of finishing handling of the request%r- requested URI%s- response status code%D- duration time in milliseconds%I- thread that handled request

Let’s see it in action.

# warmup

ab -n 20 -c 4 http://localhost:8081/examples/filtering/

# profiling of the first request

./profiler.sh start -e wall -f filtering.jfr first-application-0.0.1-SNAPSHOT.jar

ab -n 50 -c 4 http://localhost:8081/examples/filtering/

./profiler.sh stop -f filtering.jfr first-application-0.0.1-SNAPSHOT.jar

In the access logs, we can spot faster and slower requests:

[05/Dec/2022:18:41:57 +0100] [GET /examples/filtering/ HTTP/1.0] [200] [1044 ms] [http-nio-8081-exec-2]

[05/Dec/2022:18:41:57 +0100] [GET /examples/filtering/ HTTP/1.0] [200] [2779 ms] [http-nio-8081-exec-5]

[05/Dec/2022:18:41:58 +0100] [GET /examples/filtering/ HTTP/1.0] [200] [1048 ms] [http-nio-8081-exec-8]

[05/Dec/2022:18:41:58 +0100] [GET /examples/filtering/ HTTP/1.0] [200] [1052 ms] [http-nio-8081-exec-9]

[05/Dec/2022:18:41:59 +0100] [GET /examples/filtering/ HTTP/1.0] [200] [2829 ms] [http-nio-8081-exec-7]

[05/Dec/2022:18:41:59 +0100] [GET /examples/filtering/ HTTP/1.0] [200] [1058 ms] [http-nio-8081-exec-1]

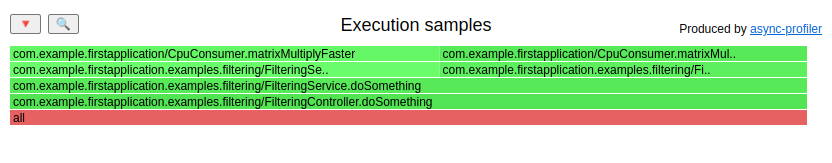

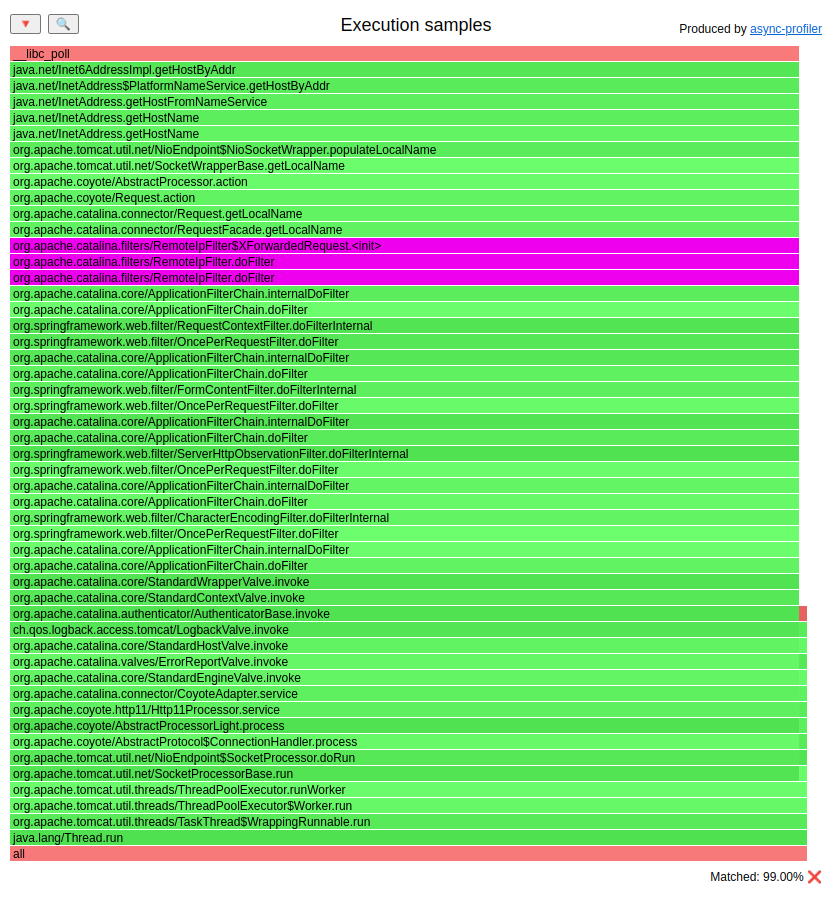

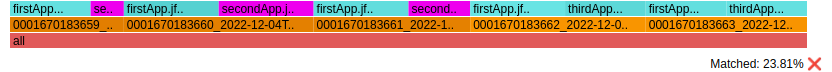

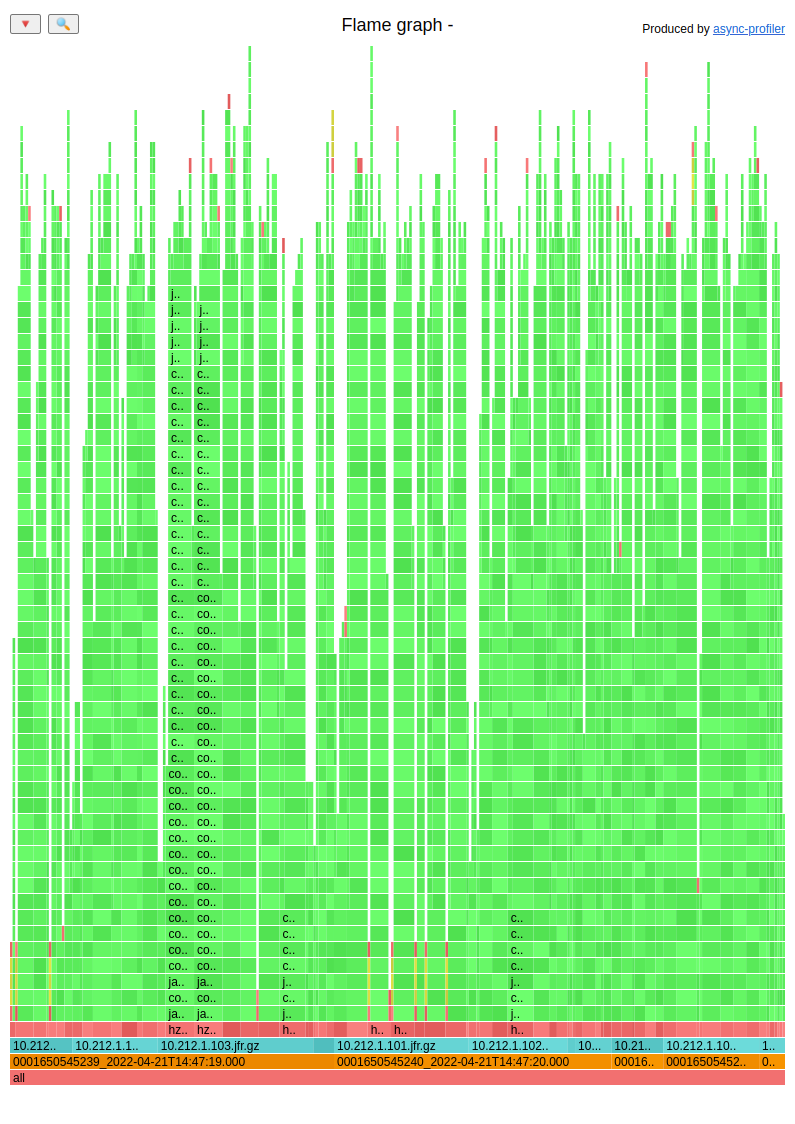

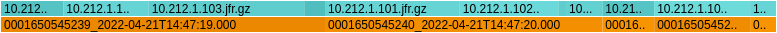

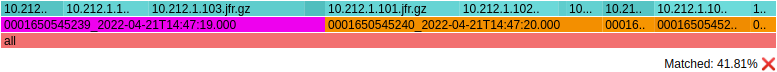

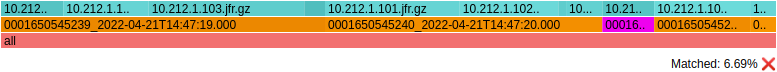

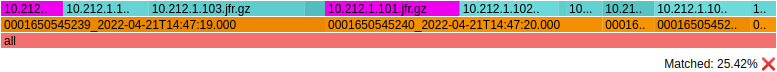

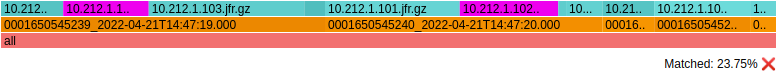

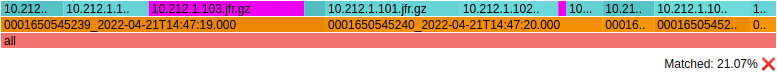

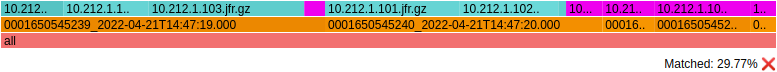

Let’s load the JFR into my viewer and look at the flame graph of the whole application: (HTML)

It’s hard to guess why some requests are slower than others. We can see two different methods executed at the top of the flame graph:

matrixMultiplySlow()matrixMultiplyFaster()

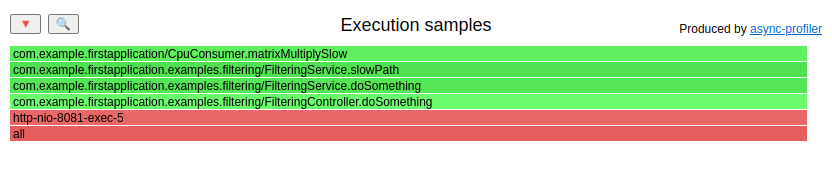

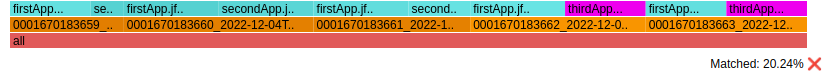

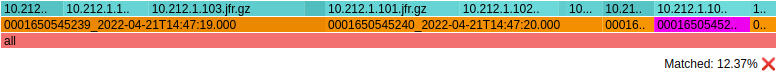

We cannot conclude from that which one is responsible for worse latency. Let’s add filters to that graph to understand the latency of the second request from pasted access log:

[05/Dec/2022:18:41:57 +0100] [GET /examples/filtering/ HTTP/1.0] [200] [2779 ms] [http-nio-8081-exec-5]

- Access log filter:

- End date -

05/Dec/2022:18:41:57 +0100 - End date format - let’s keep the default one

- Duration -

2779 - Locale language -

EN

- End date -

- Thread filter -

http-nio-8081-exec-5

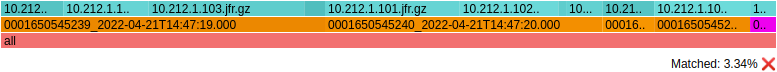

Now the flame graph is obvious: (HTML)

We can check this for a few more requests and figure out the following:

- In every slow request, we executed

matrixMultiplySlow() - In every fast request, we executed

matrixMultiplyFaster()

This technique is great for dealing with the tail of the latency: We can focus our work on the longest operations. That may lead us to some nice fixes.

Real-life example - DNS

The point of the previous example was to show you why aggregated results can be useless for tracing a single request. Now I want to show you a widespread issue I have diagnosed a few times.

Spring Boot has a commonly used addition called Actuator. One of the

features of the Actuator is the health check endpoint. Under URI /actuator/health, you can get JSON

with information about the health of your application. That endpoint is sometimes used as a load

balancer probe. Let’s consider a multi-node cluster of our example application with a load

balancer in front of the cluster, which:

- probes the actuator if the application is alive, expecting

"status" : "UP"in the response JSON - timeouts the probe after 1 second

Now, I will do one hack in my local configuration to make this example work. It will be explained at the end of this example.

Let’s find out what our IP is:

$ ifconfig

eth0: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 9001

inet 172.31.36.53 netmask 255.255.240.0 broadcast 172.31.47.255

Let’s probe an actuator by this IP, not a localhost (without the hack described later, you cannot get the same results):

./profiler.sh start -e wall -f actuator.jfr first-application-0.0.1-SNAPSHOT.jar

ab -n 1000 http://172.31.36.53:8081/actuator/health # check your IP

./profiler.sh stop -f actuator.jfr first-application-0.0.1-SNAPSHOT.jar

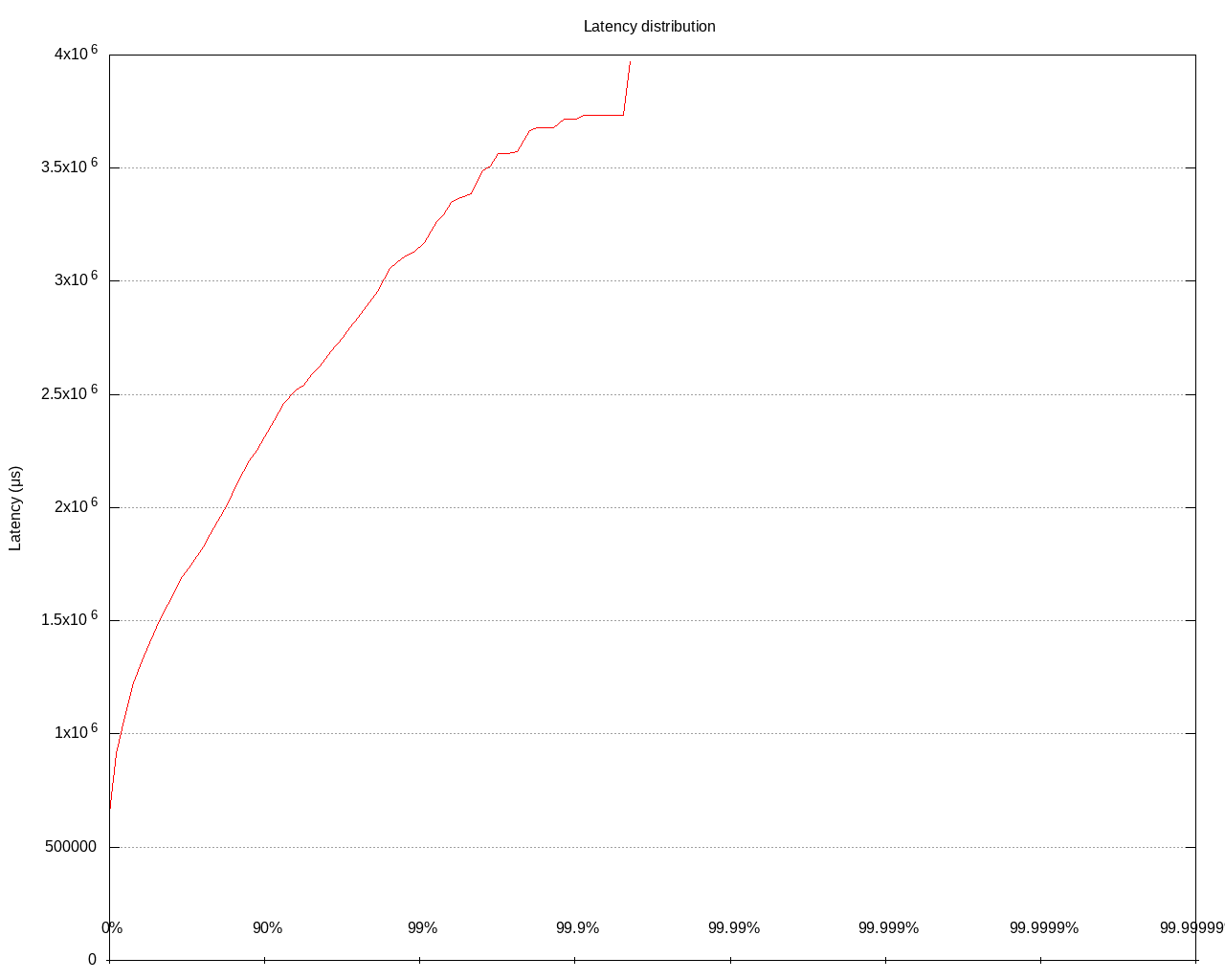

The result of ab:

Connection Times (ms)

min mean[+/-sd] median max

Connect: 0 0 0.0 0 0

Processing: 0 5 158.1 0 5000

Waiting: 0 5 158.1 0 5000

Total: 0 5 158.1 0 5000

Percentage of the requests served within a specific time (ms)

50% 0

66% 0

75% 0

80% 0

90% 0

95% 0

98% 0

99% 1

100% 5000 (longest request)

So almost all the actuator endpoints returned in 0ms, but at least one lasted 5s. We can see one longer request in the access logs:

[08/DEC/2022:08:56:24 +0000] [GET /actuator/health HTTP/1.0] [200] [0 ms] [http-nio-8081-exec-7]

[08/DEC/2022:08:56:24 +0000] [GET /actuator/health HTTP/1.0] [200] [0 ms] [http-nio-8081-exec-10]

[08/DEC/2022:08:56:24 +0000] [GET /actuator/health HTTP/1.0] [200] [0 ms] [http-nio-8081-exec-3]